Born to be cloud

Creating robust digital systems that flourish in an evolving landscape. Our services, spanning Cloud, Applications, Data, and AI, are trusted by 150+ customers. Collaborating with our global partners, we transform possibilities into tangible outcomes.

Experience our services.

We can help you make the move: design, build, and migrate to the cloud.

Cloud Migration

Maximise your investment in the cloud and achieve cost-effectiveness, on-demand scalability, virtually unlimited computing capacity, and enhanced security.

Artificial Intelligence/Machine Learning

Infuse AI & ML into your business to solve complex problems, drive top-line growth, and innovate mission-critical applications.

Data & Analytics

Discover the hidden gems in your data with cloud-native analytics. Our comprehensive solutions cover data processing, analysis, and visualization.

Generative Artificial Intelligence (GenAI)

Drive measurable business success with GenAI, where creative solutions lead to tangible outcomes, including improved operational efficiency, enhanced customer satisfaction, and accelerated time-to-market.

Awards and Competencies

Ankercloud: Partners with AWS, GCP, and Azure

We excel through partnerships with industry giants like AWS, GCP, and Azure, offering innovative solutions backed by leading cloud technologies.

Countless Happy Clients and Counting!

Check out our blog

Migrating a VM Instance from GCP to AWS: A Step-by-Step Guide

Overview

Moving a virtual machine (VM) instance from Google Cloud Platform (GCP) to Amazon Web Services (AWS) can seem daunting, but with the right tools and a step-by-step process, it is manageable. In this post, we walk you through the entire process to make the transition from GCP to AWS smooth. We use AWS’s native tool, AWS Application Migration Service, to move a VM instance from GCP to AWS.

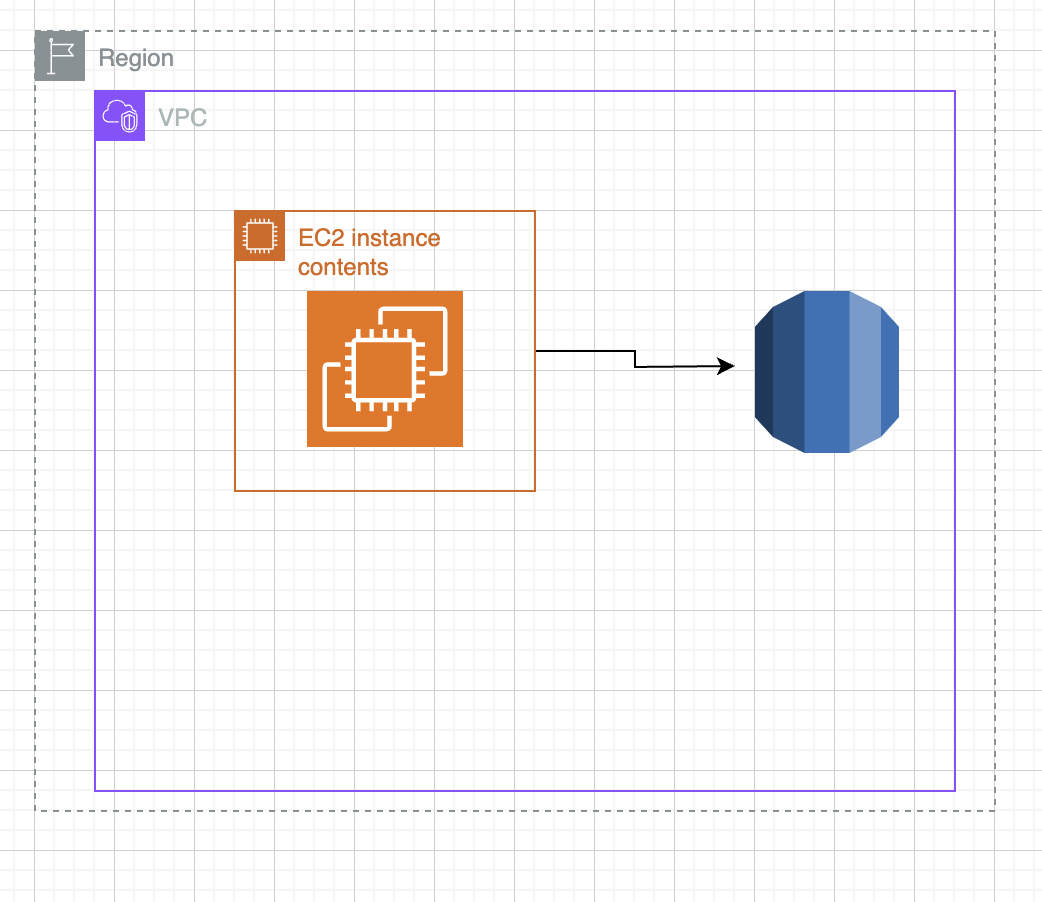

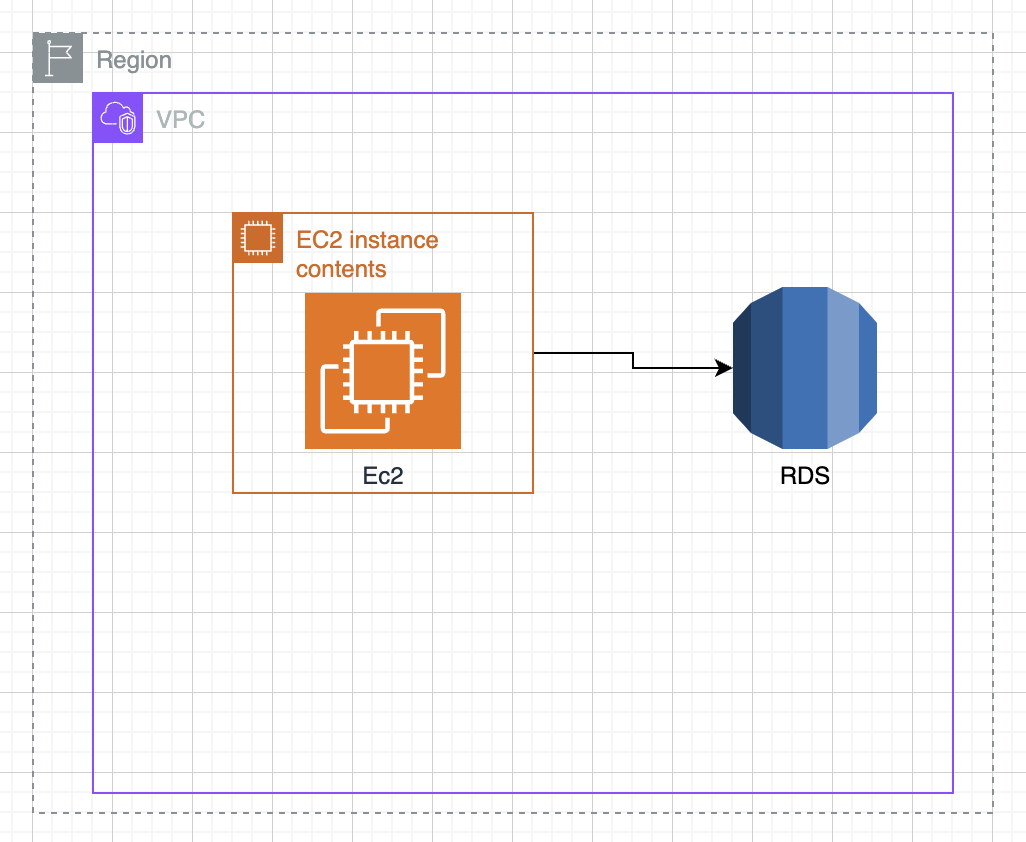

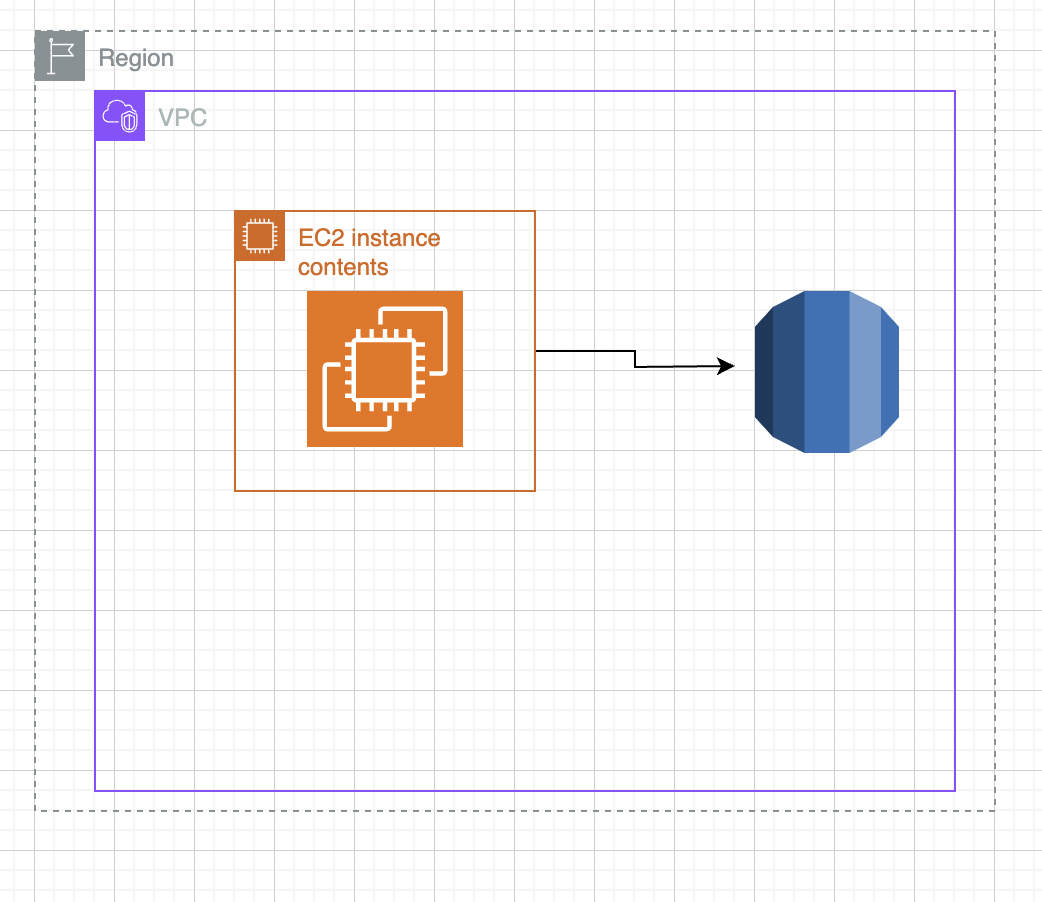

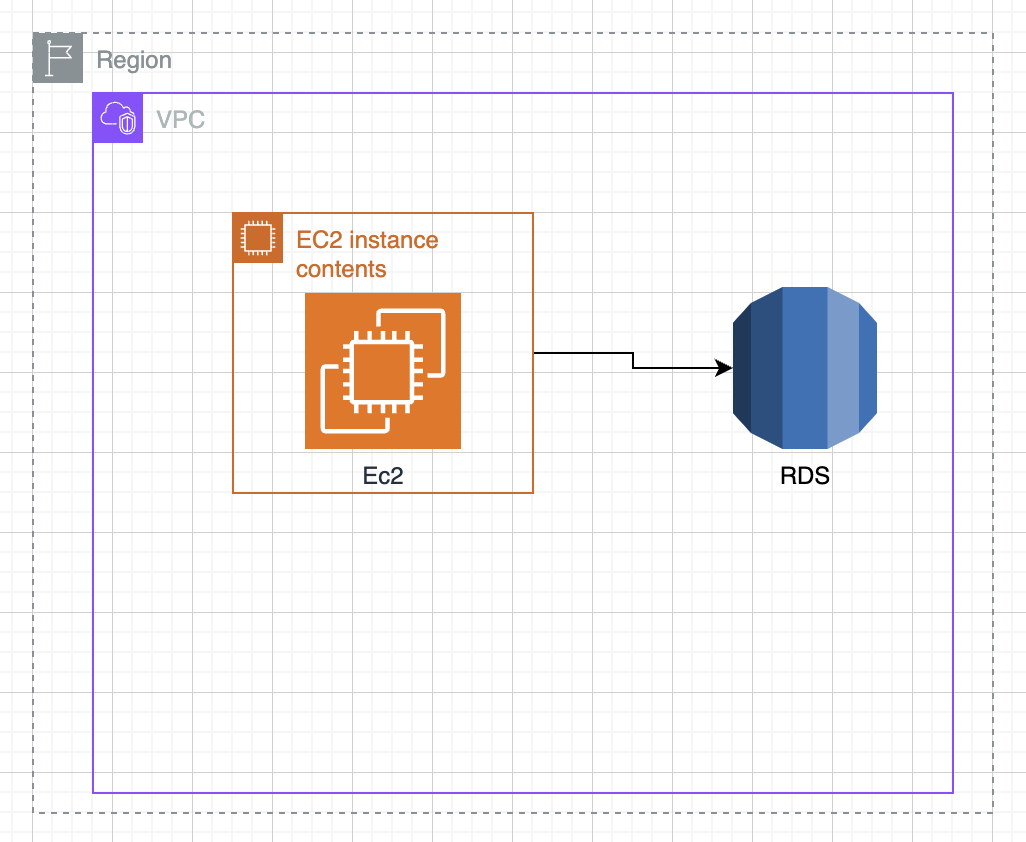

Architecture Diagram

Step-by-Step Guide

Step 1: Setup on GCP

Launch a Test Windows VM Instance

Go to your GCP console and create a test Windows VM. We created a 51 GB boot disk for this example. This will be our source VM.

RDP into the Windows Server

Next, RDP into your Windows server. Once connected, you need to install the AWS Replication Agent for AWS Application Migration Service (MGN) on this server.

Download the Replication Agent

Download the agent installer for your target AWS Region using the command given in the AWS documentation: https://docs.aws.amazon.com/mgn/latest/ug/windows-agent.html
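The exact download command is in the linked AWS documentation. As a hedged illustration, the sketch below builds the installer URL for a given Region, assuming the Region-specific S3 URL pattern that the MGN documentation uses for the Windows installer (`AwsReplicationWindowsInstaller.exe`); check the pattern against the current docs before relying on it. `installer_url` and `download_installer` are hypothetical helper names.

```python
import re
import urllib.request

# Assumption: Region-specific URL pattern for the Windows installer as shown in
# the AWS MGN docs at the time of writing; verify it against the current docs.
INSTALLER_URL = (
    "https://aws-application-migration-service-{region}.s3.{region}.amazonaws.com"
    "/latest/windows/AwsReplicationWindowsInstaller.exe"
)

# Loose sanity check for Region codes such as "us-east-1" or "eu-central-1".
REGION_RE = re.compile(r"[a-z]{2}(-[a-z]+)+-\d+")


def installer_url(region: str) -> str:
    """Return the (assumed) installer URL for the target AWS Region."""
    if not REGION_RE.fullmatch(region):
        raise ValueError(f"not an AWS Region code: {region!r}")
    return INSTALLER_URL.format(region=region)


def download_installer(region: str, dest: str = "AwsReplicationWindowsInstaller.exe") -> str:
    """Download the installer to `dest` (run this on the source VM) and return the path."""
    path, _headers = urllib.request.urlretrieve(installer_url(region), dest)
    return path
```

For the N. Virginia Region used in this guide, the code is `us-east-1`, so `installer_url("us-east-1")` gives the URL to fetch on the GCP VM.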

Step 2: Install the Replication Agent

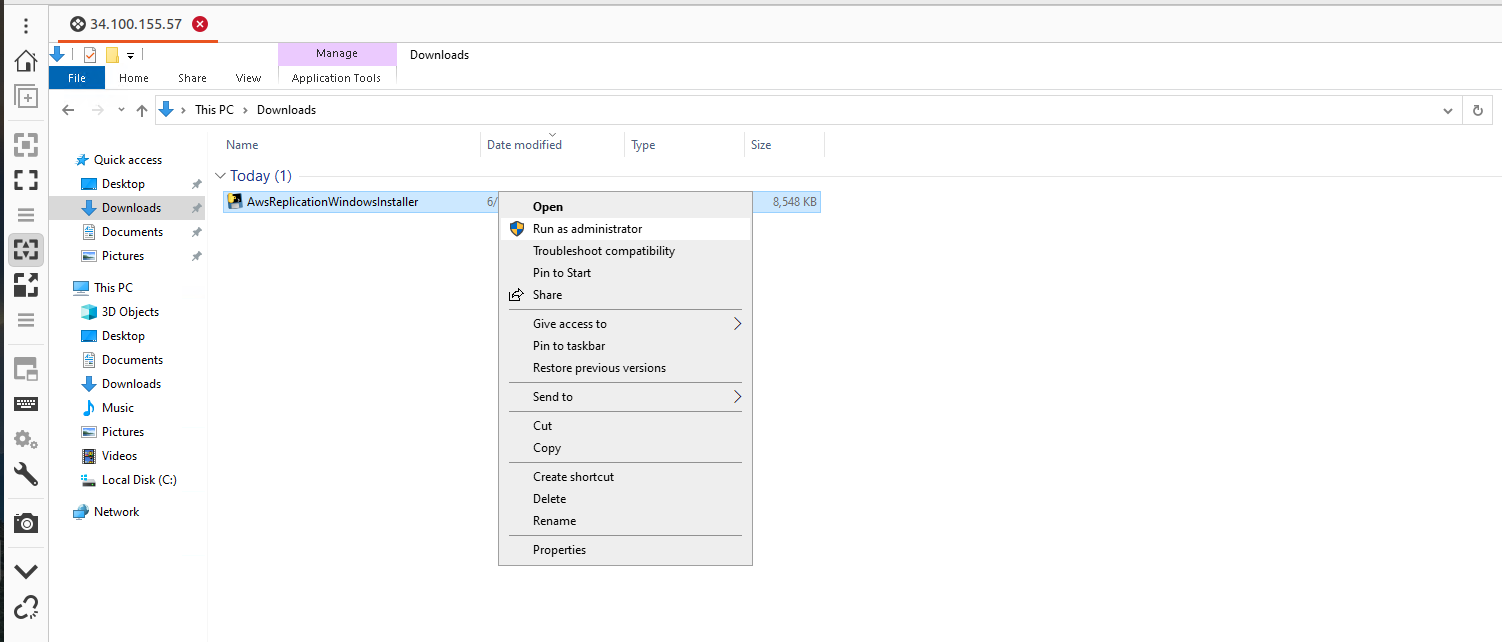

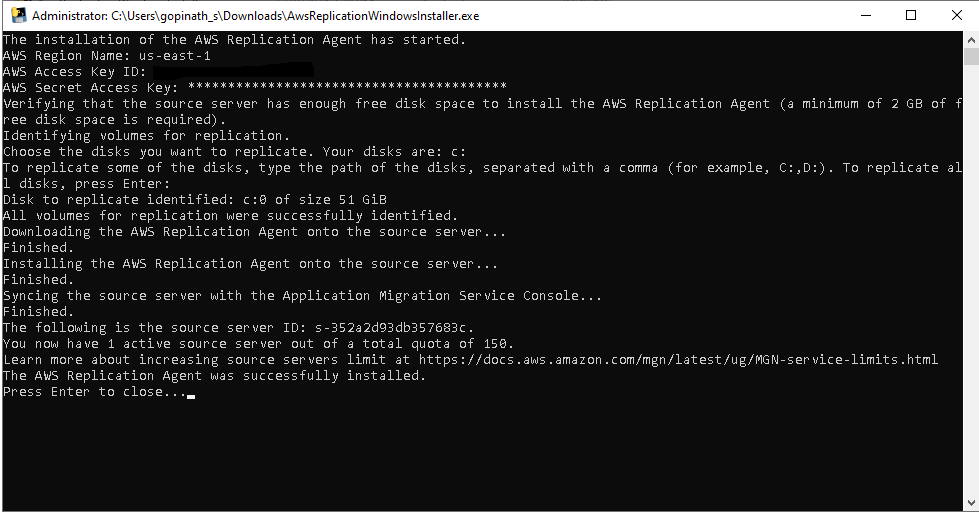

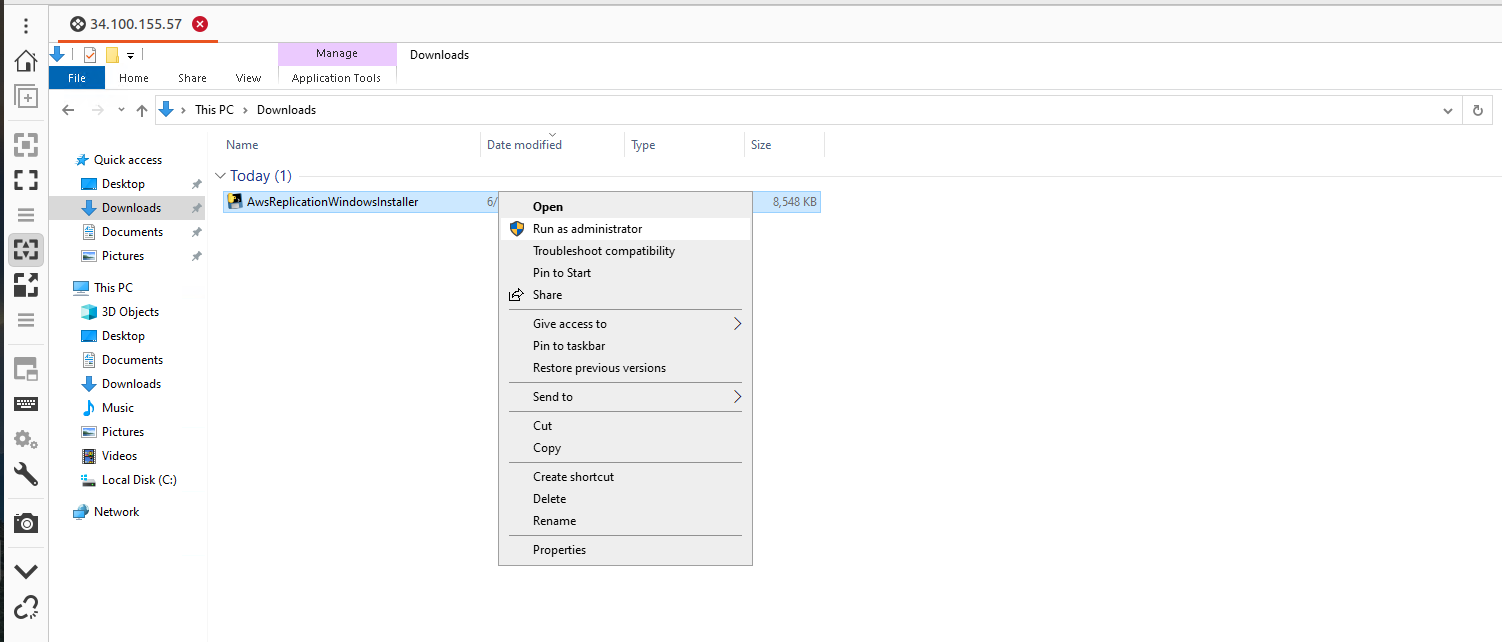

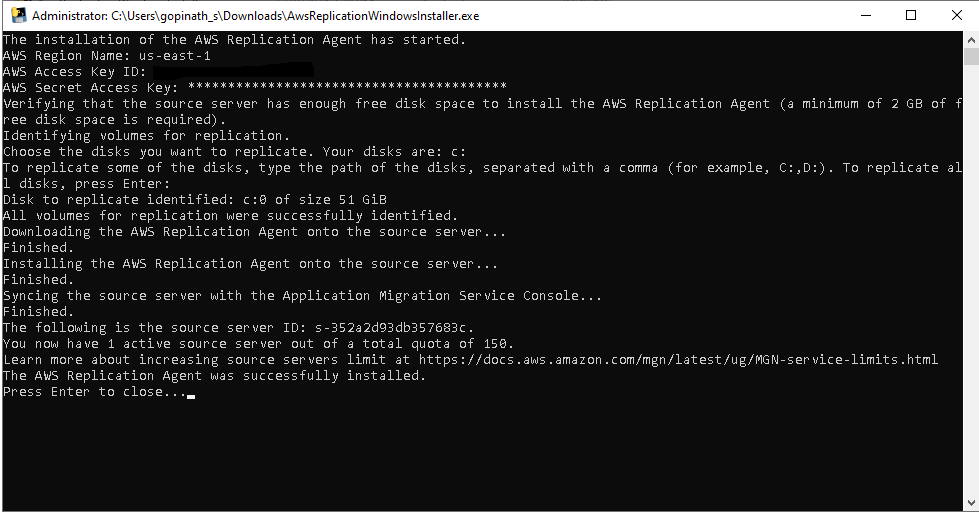

Open a Command Prompt with administrator privileges, navigate to the Downloads folder, and run the agent installer.

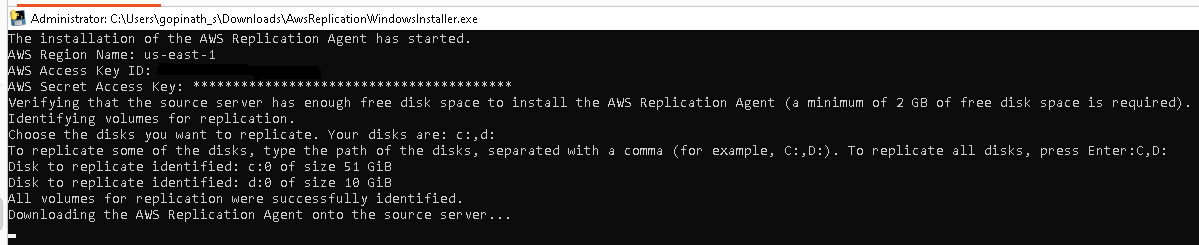

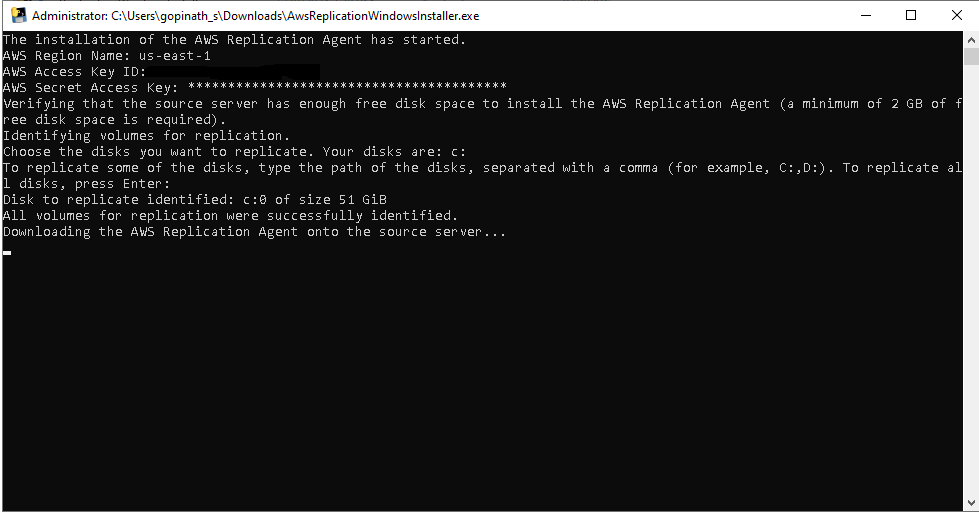

During installation, you will be asked to choose the AWS Region to replicate to. For this guide, we chose US East (N. Virginia), us-east-1.

Step 3: Prepare the AWS Console

Create a User and Attach Permissions

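In the AWS console, create a new IAM user and attach the permissions the replication agent needs (AWS provides the managed policy AWSApplicationMigrationAgentPolicy for this purpose). Then generate an access key and secret key for this user.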

When creating the access key, choose the “Third-party service” use case.

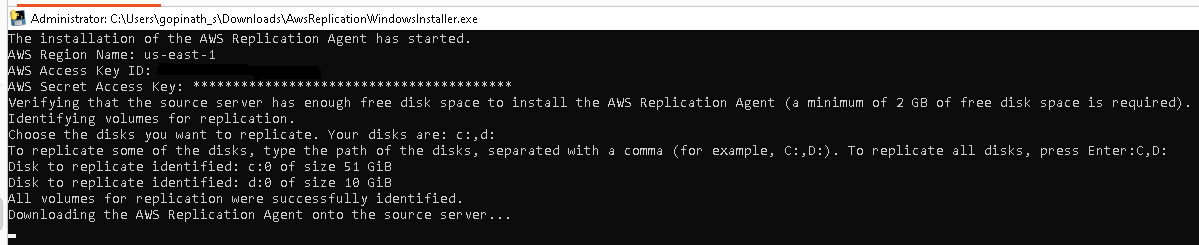

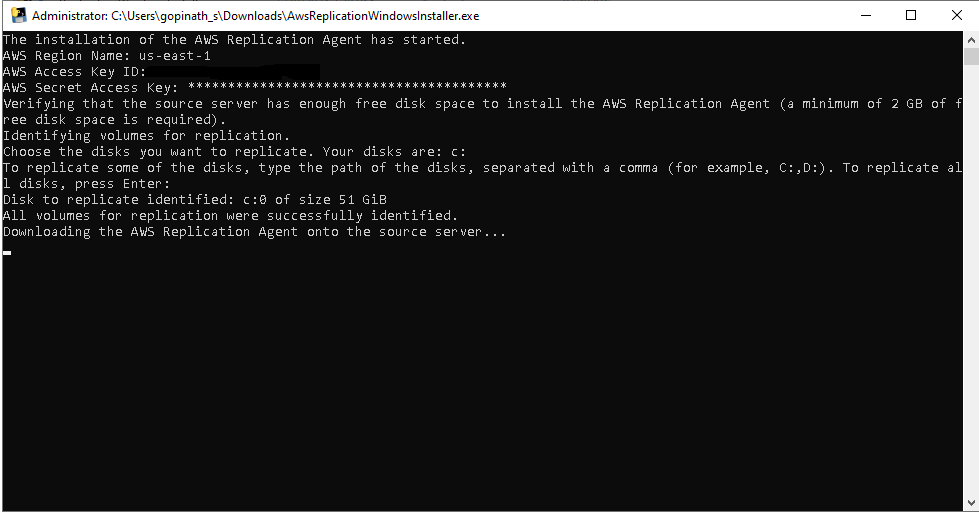
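The same user setup can be scripted. A minimal sketch, assuming a boto3 IAM client passed in as `iam` (for example `boto3.client("iam")`) and the AWS managed policy `AWSApplicationMigrationAgentPolicy`; `create_agent_user` and the user name are hypothetical. The console's “Third-party service” choice is only a use-case label and has no counterpart in this call sequence.

```python
# Assumed ARN of the AWS managed policy for installing the replication agent.
AGENT_POLICY_ARN = "arn:aws:iam::aws:policy/AWSApplicationMigrationAgentPolicy"


def create_agent_user(iam, user_name: str) -> tuple[str, str]:
    """Create an IAM user for the replication agent; return (access key ID, secret key).

    `iam` is assumed to be a boto3 IAM client, e.g. boto3.client("iam").
    """
    iam.create_user(UserName=user_name)
    # Grant only the permissions the agent needs for installation.
    iam.attach_user_policy(UserName=user_name, PolicyArn=AGENT_POLICY_ARN)
    key = iam.create_access_key(UserName=user_name)["AccessKey"]
    # The secret is returned only once; store it securely.
    return key["AccessKeyId"], key["SecretAccessKey"]
```

Passing the client in keeps the helper easy to test and lets you choose the profile and Region when you create the client.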

Enter the Keys into the GCP Windows Server

Enter the access key ID and secret access key on the GCP Windows server when the installer prompts for them. The agent then asks which disks to replicate (e.g., the C: and D: drives); in this example, we pressed Enter to replicate all disks.

Once this is done, the agent finishes installing and starts replicating your data.

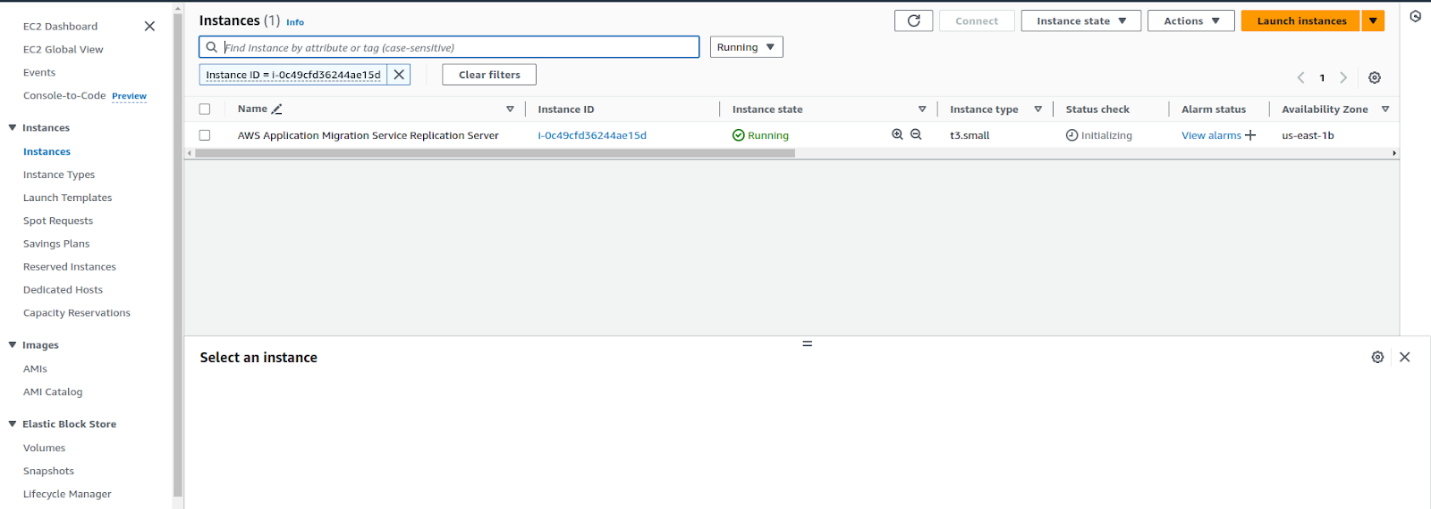

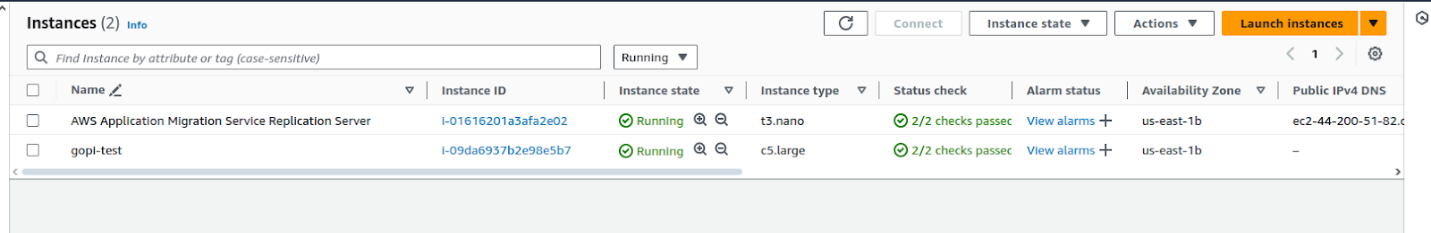

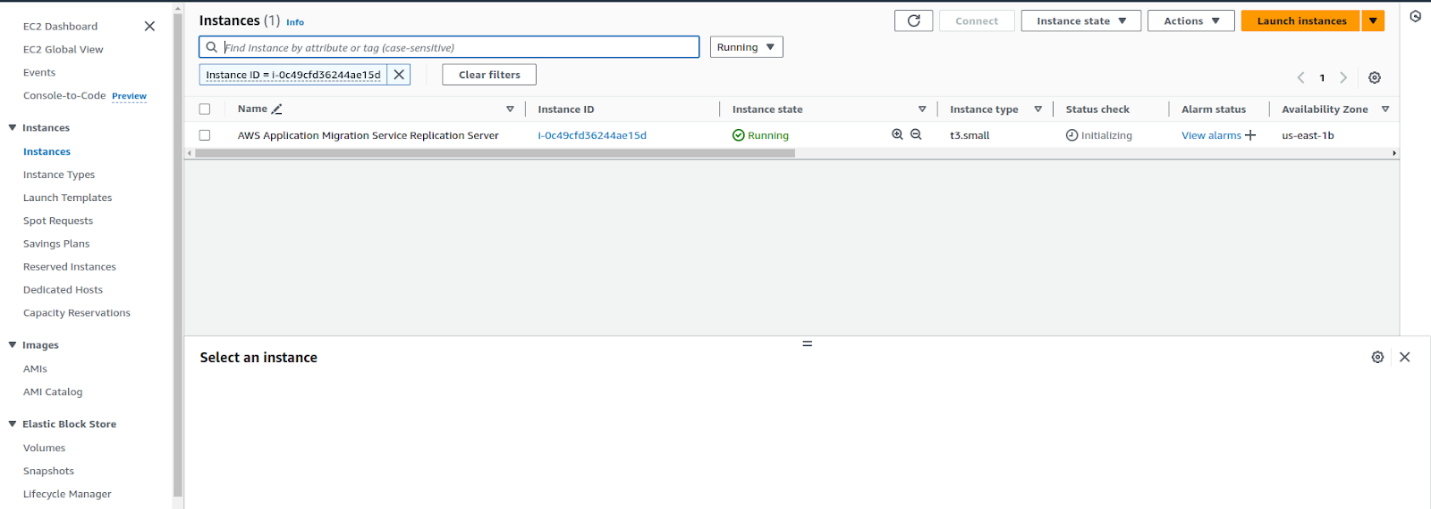

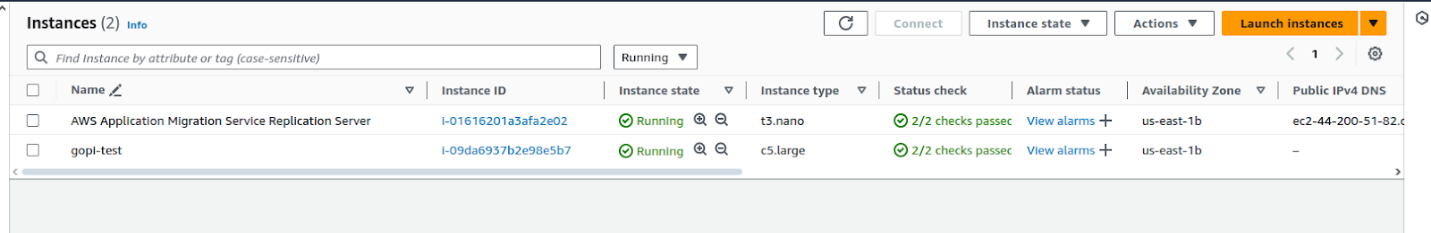

After the agent was installed on the source Windows server in GCP, one new instance appeared in the EC2 console of our AWS account: a replication server, which MGN uses to replicate the VM's data from GCP to the AWS account.

Step 4: Monitor the Data Migration

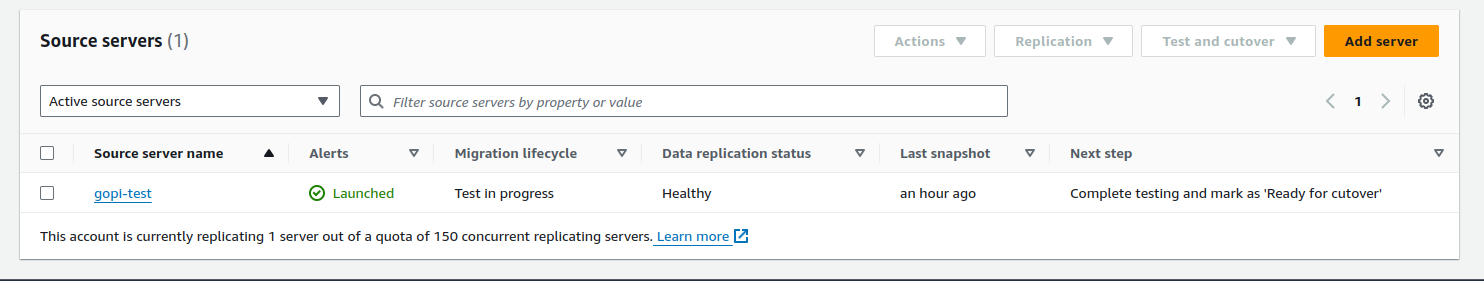

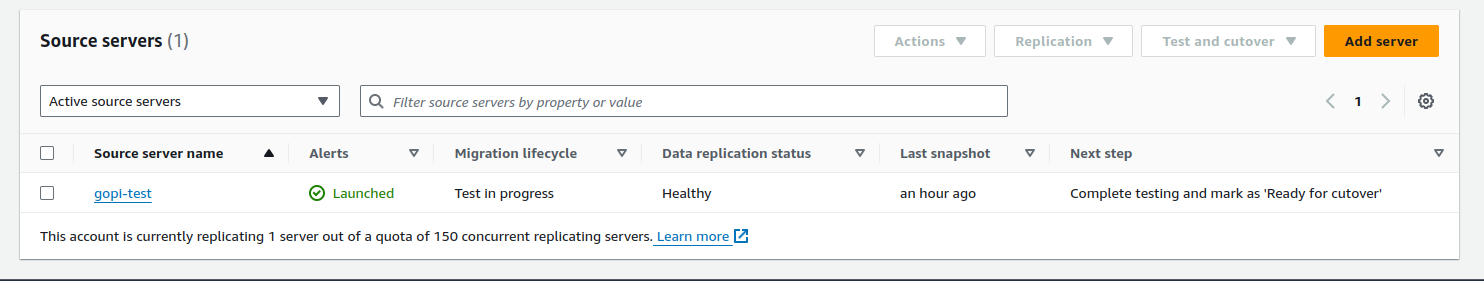

Go to Application Migration Service in the AWS console. Under Source servers, you should see your GCP VM instance listed.

Data replication starts automatically, and you can monitor its progress there. Depending on the size of your disks and the amount of data, this may take some time.
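Progress can also be read programmatically. A minimal sketch, assuming the response shape of the boto3 `mgn` client's `describe_source_servers`, in which each server item carries `dataReplicationInfo.replicatedDisks` with `totalStorageBytes` and `replicatedStorageBytes`; verify these field names against the MGN API reference. `replication_progress` is a hypothetical helper.

```python
def replication_progress(server: dict) -> float:
    """Return the replicated fraction (0.0 to 1.0) across all disks of one source server.

    `server` is assumed to be one item of
    mgn.describe_source_servers(filters={"sourceServerIDs": [server_id]})["items"].
    """
    disks = server.get("dataReplicationInfo", {}).get("replicatedDisks", [])
    total = sum(d.get("totalStorageBytes", 0) for d in disks)
    done = sum(d.get("replicatedStorageBytes", 0) for d in disks)
    # Avoid dividing by zero before the agent has reported any disks.
    return done / total if total else 0.0
```

For display, `f"{replication_progress(item):.0%}"` renders the fraction as a percentage.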

In our case, it took over half an hour to replicate the data from the 51 GB boot disk of the GCP VM instance to AWS. Once replication completed, the server was ready for the testing stage.

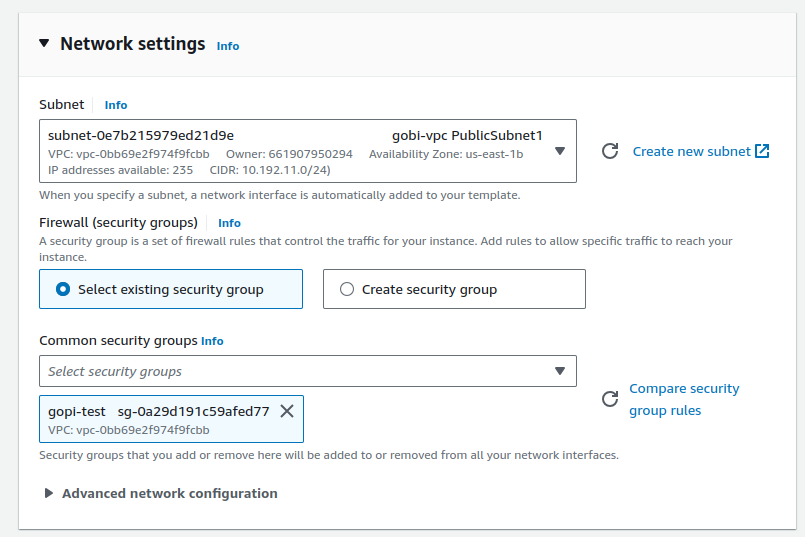

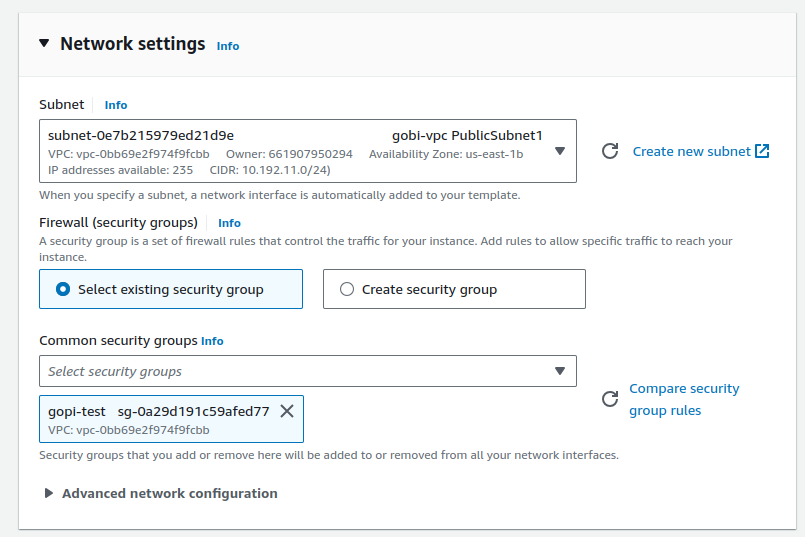
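For capacity planning, the timing from Step 4 (51 GB in over half an hour) implies a rough upper bound on the average replication throughput, assuming the full 51 GB (decimal gigabytes) was transferred. Because the transfer took longer than 30 minutes, the real average was lower:

```latex
\bar{r} \;<\; \frac{51\ \text{GB}}{30\ \text{min}}
= \frac{51\,000\ \text{MB}}{1\,800\ \text{s}}
\approx 28.3\ \text{MB/s}
\approx 227\ \text{Mbit/s}
```

Actual throughput depends on the source's outbound bandwidth and the replication server, so treat this only as a rough guide for estimating larger disks.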

Step 5: Create a Launch Template

After the data replication is done, configure the launch template for your use case. It should specify the instance type, key pair, subnet (which also determines the VPC), security groups, and so on. The new EC2 instance will be launched from this template.

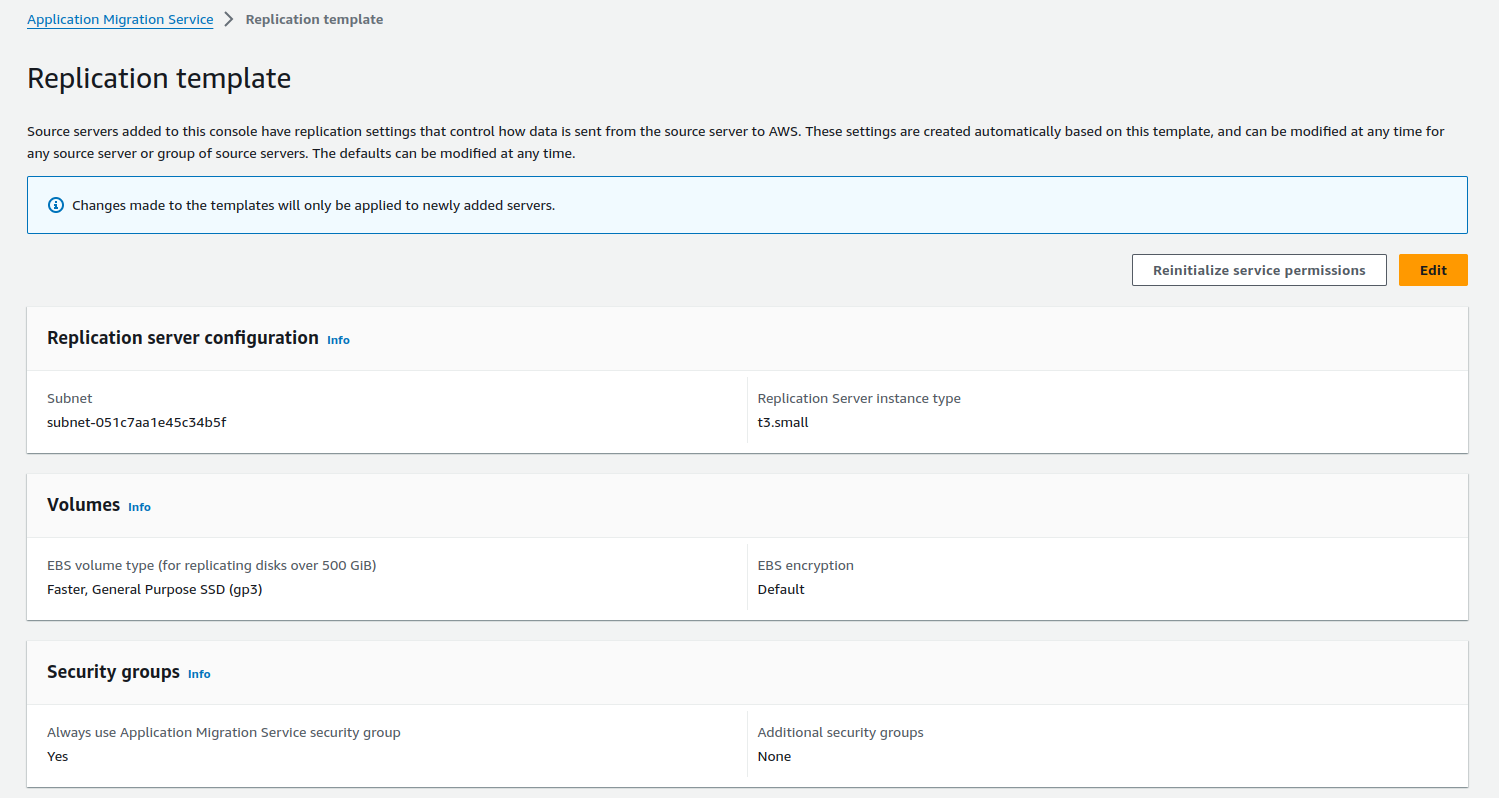

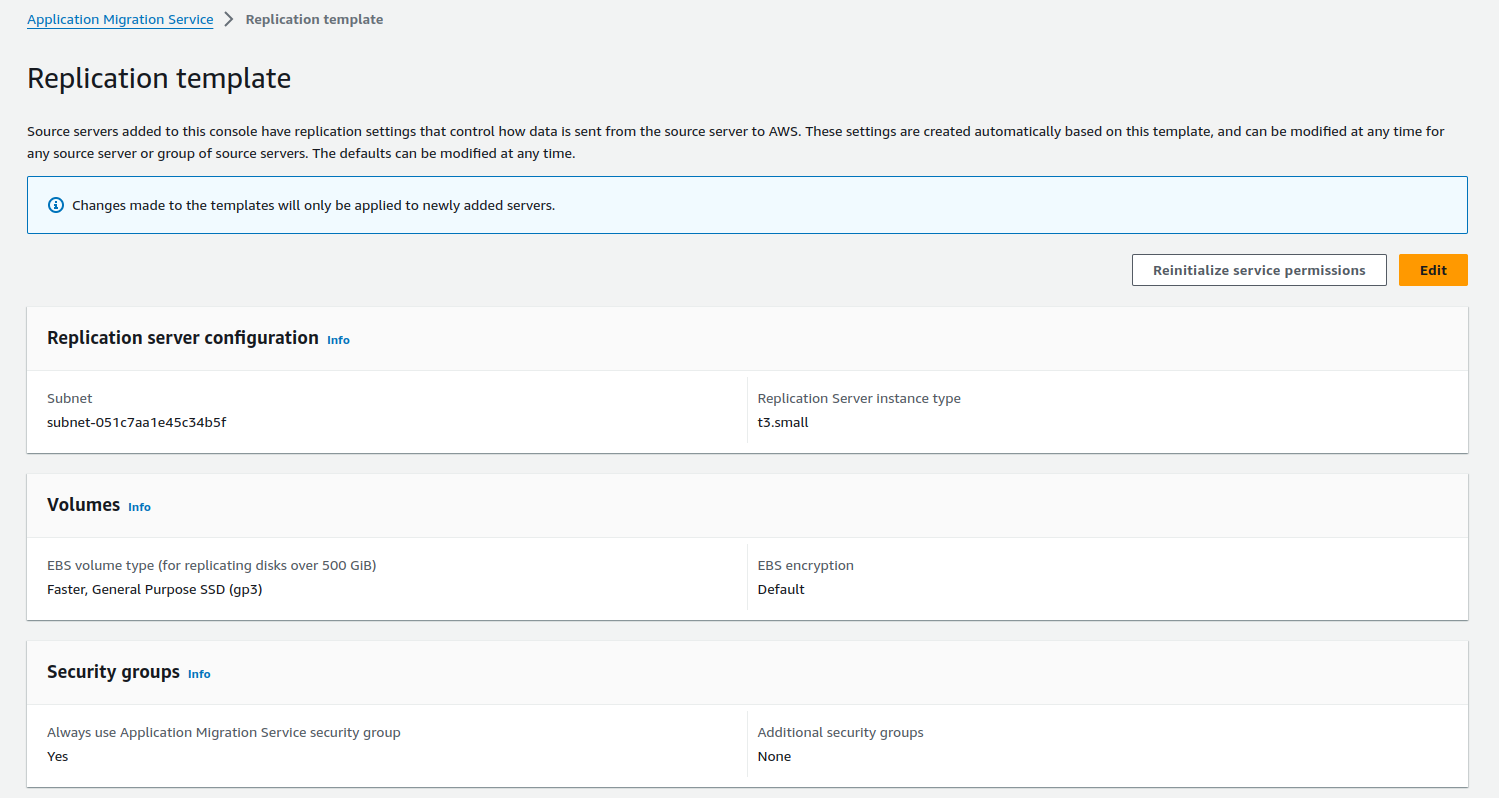
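MGN keeps an EC2 launch template for each source server, and changes are made by creating a new template version and setting it as the default. A minimal sketch of that, assuming a boto3 EC2 client passed in as `ec2`, the template ID taken from the server's launch settings in the MGN console, and placeholder values for the instance type, key pair, subnet, and security groups; `launch_template_data` and `update_launch_template` are hypothetical helpers.

```python
def launch_template_data(instance_type: str, key_name: str, subnet_id: str,
                         security_group_ids: list[str]) -> dict:
    """Build the LaunchTemplateData fields this guide mentions (instance type, key pair, network)."""
    return {
        "InstanceType": instance_type,
        "KeyName": key_name,
        # The primary network interface's subnet also fixes the VPC.
        "NetworkInterfaces": [{
            "DeviceIndex": 0,
            "SubnetId": subnet_id,
            "Groups": security_group_ids,
        }],
    }


def update_launch_template(ec2, template_id: str, source_version: str, data: dict) -> int:
    """Create a new template version based on `source_version` and make it the default.

    `ec2` is assumed to be a boto3 EC2 client, e.g. boto3.client("ec2").
    """
    resp = ec2.create_launch_template_version(
        LaunchTemplateId=template_id,
        SourceVersion=source_version,  # inherit settings already in the template
        LaunchTemplateData=data,
    )
    version = resp["LaunchTemplateVersion"]["VersionNumber"]
    ec2.modify_launch_template(LaunchTemplateId=template_id, DefaultVersion=str(version))
    return version
```

Basing the new version on an existing one keeps any settings MGN already placed in the template and overrides only the fields passed in `data`.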

Step 6: Create a Replication Template

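Similarly, configure the replication settings template. It controls how MGN replicates your data into AWS, for example the staging-area subnet, the replication server instance type, the EBS volume type, and encryption.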

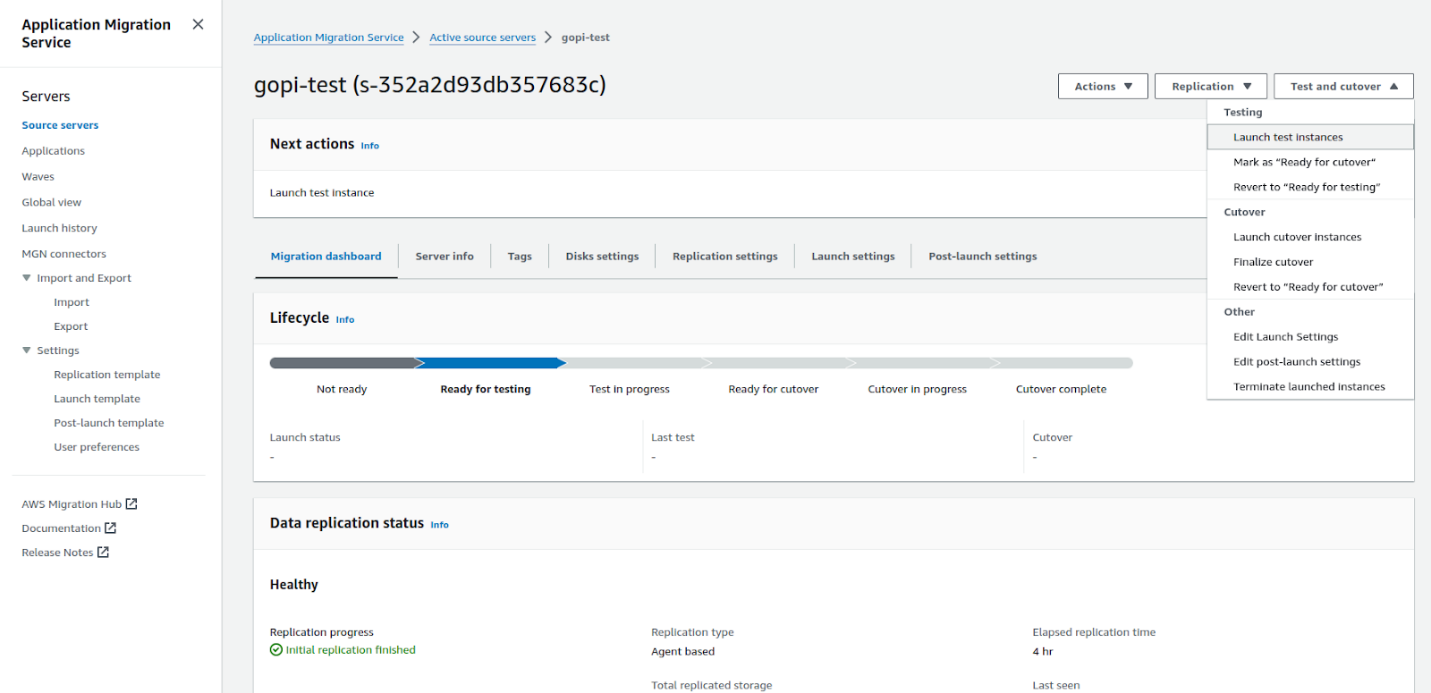

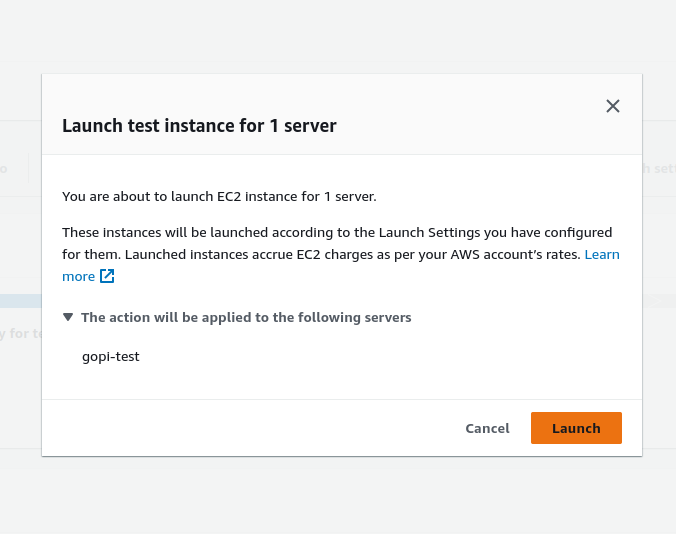

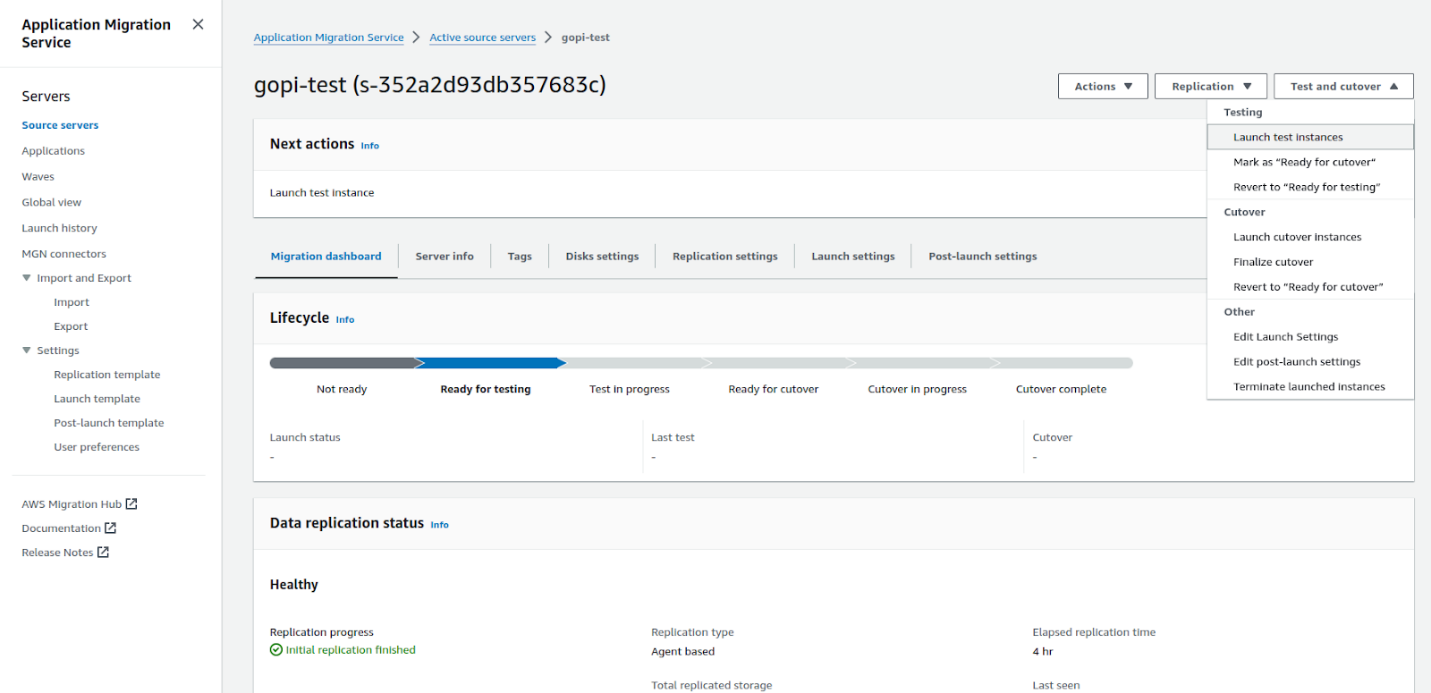

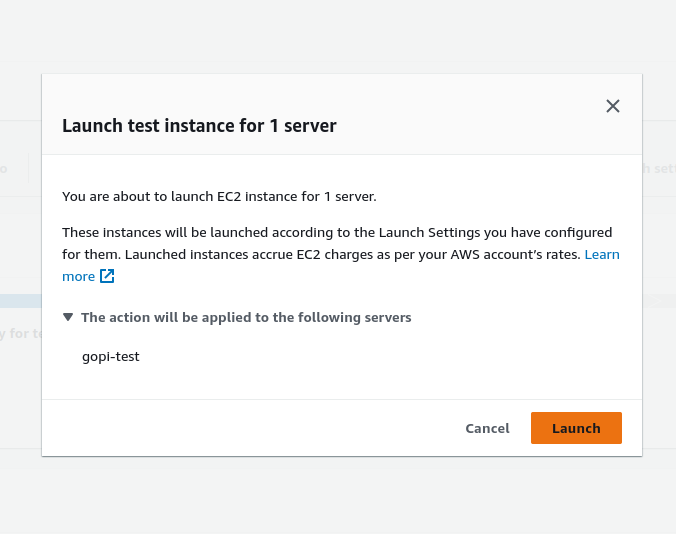

Step 7: Launch an EC2 Test Instance

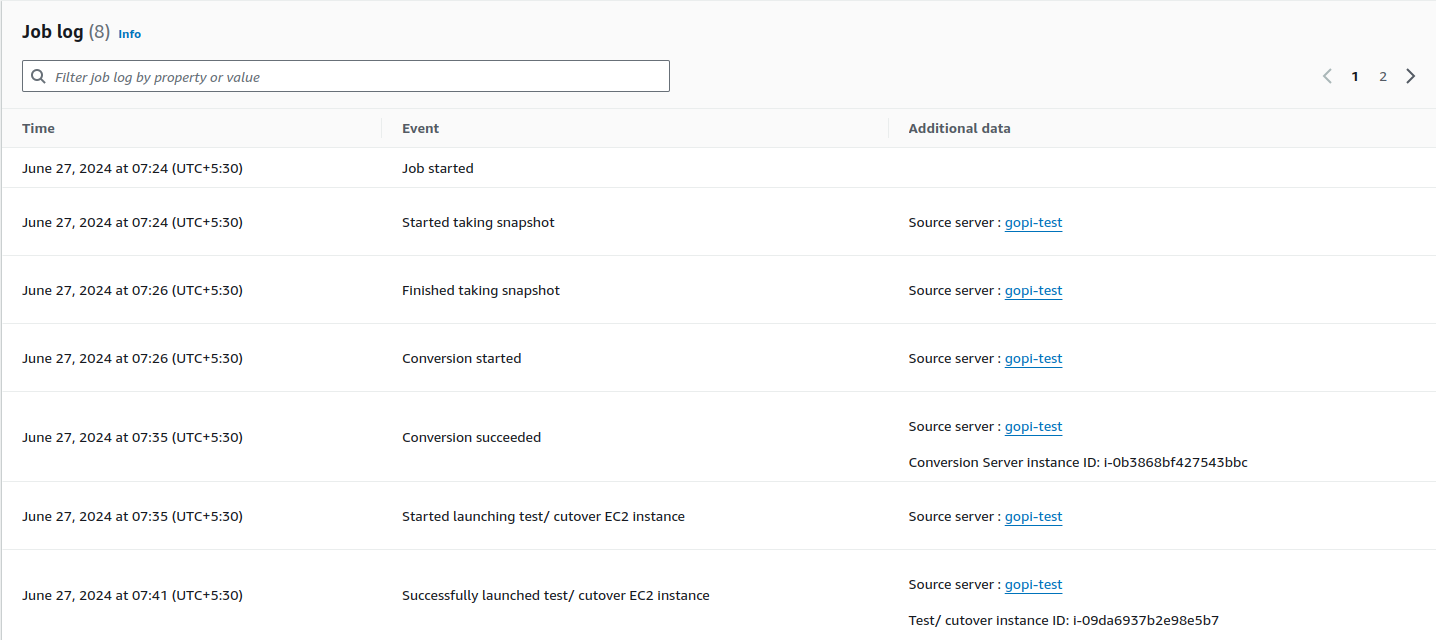

Once the templates are set up, launch a test EC2 instance for your source GCP VM from the MGN console. MGN takes a point-in-time snapshot of the replicated disks and launches the test instance from it, so no manual migration steps are required. The test instance should launch successfully and match your original GCP VM.
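The test launch can also be triggered through the API. A minimal sketch, assuming a boto3 `mgn` client passed in as `mgn`; `describe_source_servers`, `start_test`, and the `READY_FOR_TEST` lifecycle state come from the MGN API, while `lifecycle_state` and `launch_test_instance` are hypothetical helper names.

```python
def lifecycle_state(mgn, source_server_id: str) -> str:
    """Return the MGN lifecycle state of one source server, e.g. "READY_FOR_TEST"."""
    items = mgn.describe_source_servers(
        filters={"sourceServerIDs": [source_server_id]}
    )["items"]
    if not items:
        raise LookupError(f"source server not found: {source_server_id}")
    return items[0]["lifeCycle"]["state"]


def launch_test_instance(mgn, source_server_id: str) -> str:
    """Start a test launch once initial replication has finished; return the launch job ID."""
    state = lifecycle_state(mgn, source_server_id)
    # Conservative check: this sketch only launches from READY_FOR_TEST.
    if state != "READY_FOR_TEST":
        raise RuntimeError(f"server is in state {state}, not READY_FOR_TEST")
    job = mgn.start_test(sourceServerIDs=[source_server_id])["job"]
    return job["jobID"]
```

The returned job ID corresponds to the launch job whose steps the console shows.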

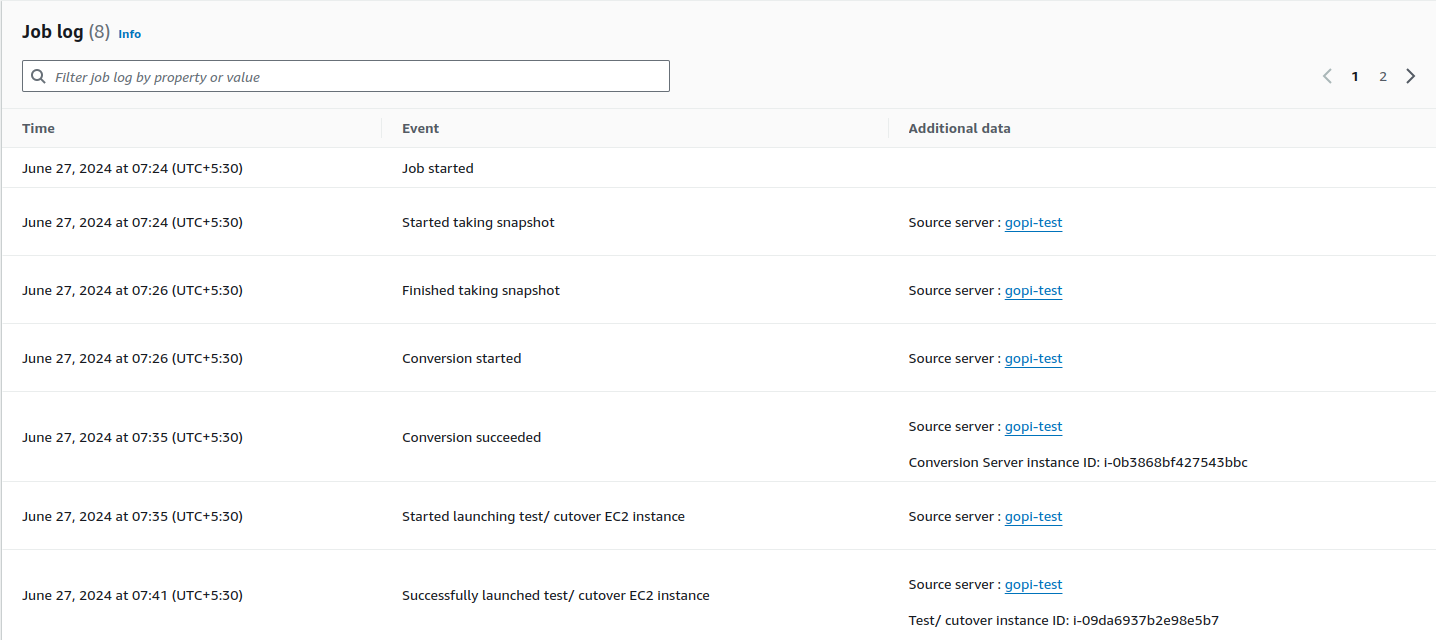

Once we started the test launch, everything happened automatically until the test EC2 instance was running. The screenshots below show the automated launch process.

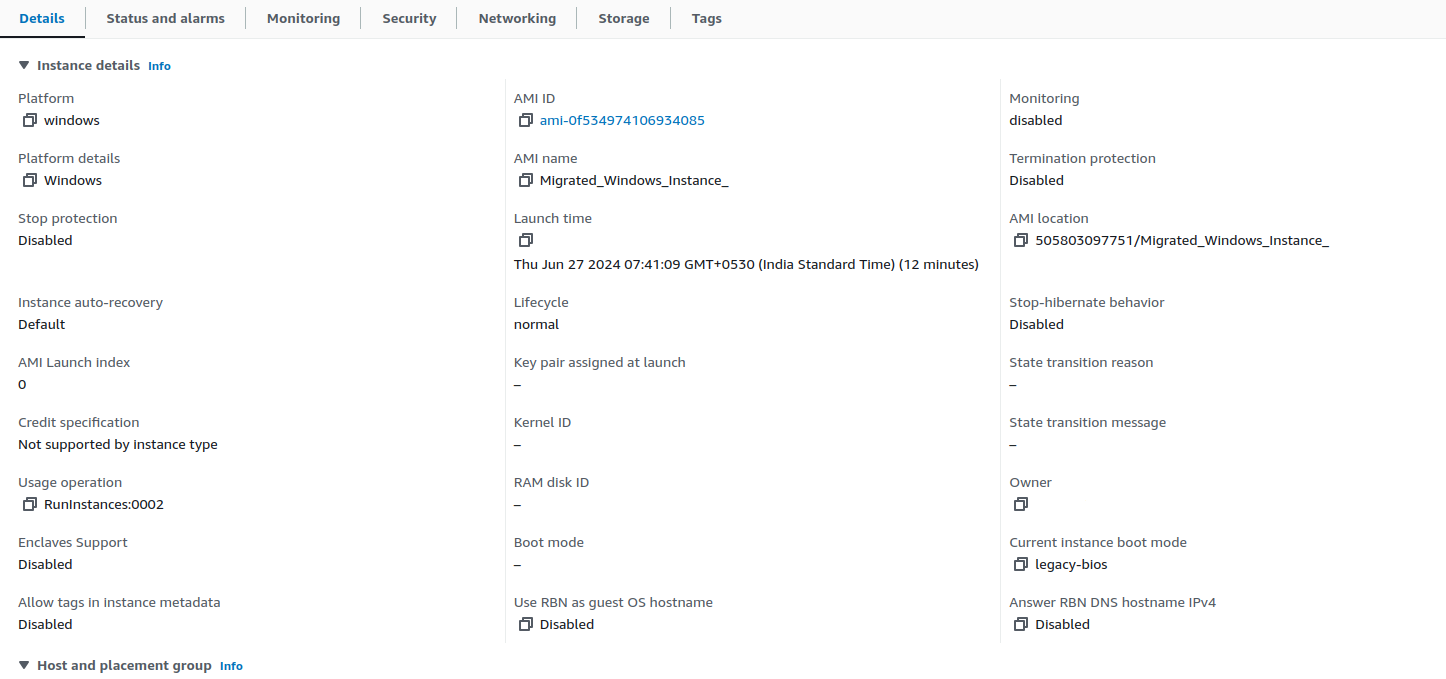

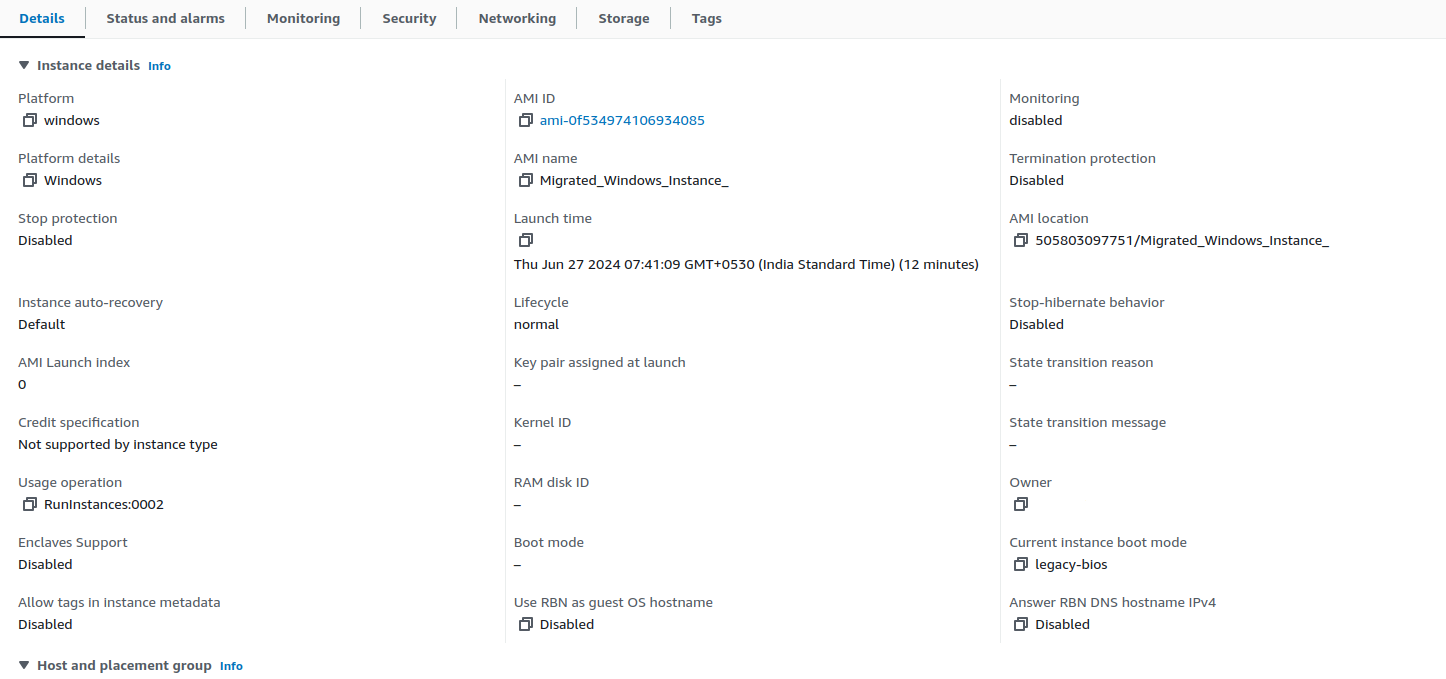

When the launch job finished, the test instance, built from the data that the MGN replication server had replicated from GCP, appeared in the AWS EC2 console.

Test EC2 instance configuration for your reference:

Step 8: Final Cutover Stage

Once the cutover is complete and the new EC2 instance is launched, the test EC2 instance and the replication server are terminated, leaving only the new EC2 instance with our custom configuration. See the screenshots below.

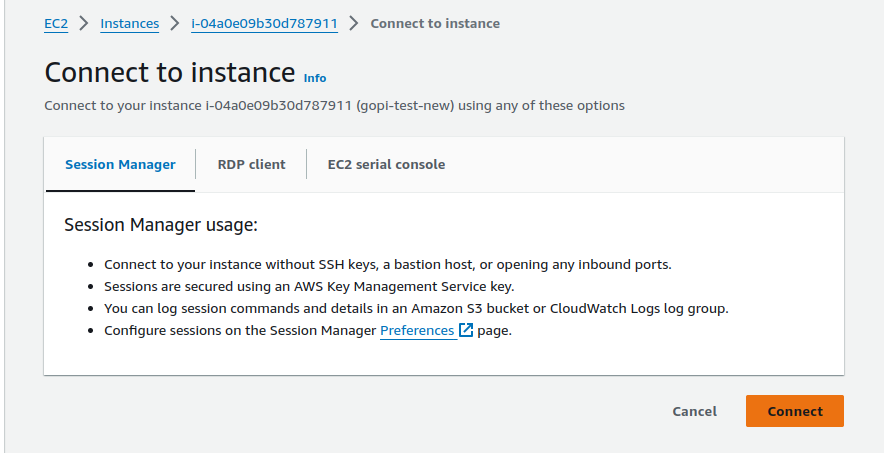

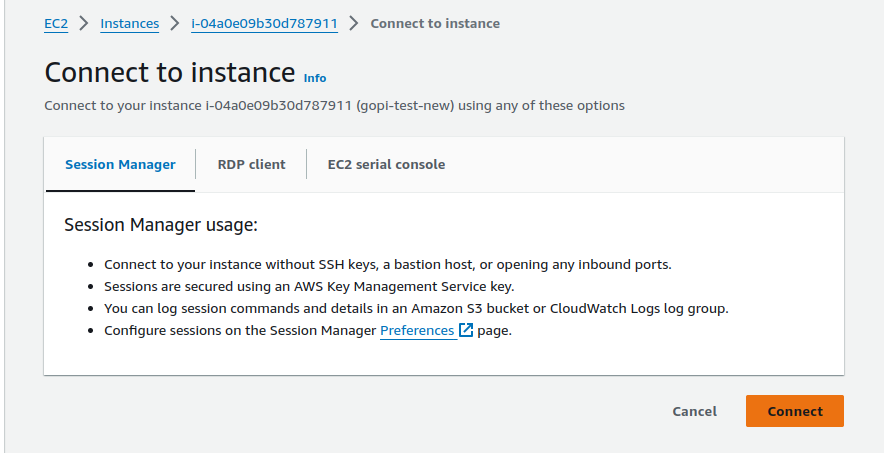
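Scripted, the cutover comes down to marking the server ready for cutover, launching the cutover instance, and, after you have checked that instance, finalizing. A minimal sketch, assuming the boto3 `mgn` client's `change_server_life_cycle_state`, `start_cutover`, and `finalize_cutover` operations; `begin_cutover` and `complete_cutover` are hypothetical names, and the check between the two calls is deliberately left to you.

```python
def begin_cutover(mgn, source_server_id: str) -> str:
    """Mark a tested server ready for cutover and launch its cutover instance; return the job ID."""
    mgn.change_server_life_cycle_state(
        sourceServerID=source_server_id,
        lifeCycle={"state": "READY_FOR_CUTOVER"},
    )
    return mgn.start_cutover(sourceServerIDs=[source_server_id])["job"]["jobID"]


def complete_cutover(mgn, source_server_id: str) -> None:
    """Finalize only after verifying the cutover instance; MGN then stops replicating this server."""
    mgn.finalize_cutover(sourceServerID=source_server_id)
```

Keeping the two calls separate leaves room for the verification in Steps 9 and 10 before replication is stopped for good.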

Step 9: Verify the EC2 Instance

Log in to the new EC2 instance over RDP and verify that all data was migrated and is intact and accessible, checking for any discrepancies.
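One way to check for discrepancies is to compare file checksums on the source VM and the migrated instance. A minimal sketch using only the Python standard library: run `build_manifest` against the same folder on both machines (saving the result with `json.dump`, for example), then compare the two with `diff_manifests`. Both names are hypothetical, and this check is an addition of ours, not part of MGN.

```python
import hashlib
from pathlib import Path


def build_manifest(root: str) -> dict[str, str]:
    """Map each file's path (relative to `root`, with forward slashes) to its SHA-256 digest."""
    base = Path(root)
    manifest = {}
    for path in sorted(base.rglob("*")):
        if path.is_file():
            digest = hashlib.sha256()
            with path.open("rb") as fh:
                # Read in 1 MiB chunks so large files don't have to fit in memory.
                for chunk in iter(lambda: fh.read(1 << 20), b""):
                    digest.update(chunk)
            manifest[path.relative_to(base).as_posix()] = digest.hexdigest()
    return manifest


def diff_manifests(source: dict[str, str], target: dict[str, str]) -> dict[str, list[str]]:
    """Report files missing on the target, unexpected extras, and content mismatches."""
    return {
        "missing": sorted(source.keys() - target.keys()),
        "extra": sorted(target.keys() - source.keys()),
        "changed": sorted(k for k in source.keys() & target.keys() if source[k] != target[k]),
    }
```

Point it at application and data folders rather than a whole system drive: files that change during normal operation (logs, caches) will legitimately differ, and locked system files cannot be read.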

Step 10: Test Your Application

After verifying the data, test your application to confirm that it works as expected in the new AWS environment. We tested our sample web application, and it worked as expected.

Conclusion

Migrating a VM instance from GCP to AWS is a multistep process, but with proper planning and execution, it can be done smoothly. By following this guide, you can migrate your data securely and run your applications in the new environment.

AWS' Generative AI Strategy: Rapid Innovation and Comprehensive Solutions

Understanding Generative AI

Generative AI is a branch of artificial intelligence that can create new content, such as conversations, stories, images, videos, or music. At its core, generative AI relies on machine learning models known as foundation models (FMs). These models are trained on extensive datasets and, thanks to their large number of parameters, can perform a wide range of tasks. This distinguishes them from traditional machine learning models, which are typically designed for a single task such as sentiment analysis, image classification, or trend forecasting. Foundation models can be adapted to new tasks without extensive labeled data or training from scratch.

Key Factors Behind the Success of Foundation Models

There are three main reasons why foundation models have been so successful:

1. Transformer Architecture: The transformer architecture is a type of neural network that is not only efficient and scalable but also capable of modeling complex dependencies between input and output data. This architecture has been pivotal in the development of powerful generative AI models.

2. In-Context Learning: Pre-trained models can perform new tasks from instructions or a few examples supplied in the prompt, without updating the model's weights or requiring extensive labeled data. As a result, these models can be deployed quickly and effectively in a wide range of applications.

3. Emergent Behaviors at Scale: As models grow in size and are trained on larger datasets, they begin to exhibit new capabilities that were not present in smaller models. These emergent behaviors highlight the potential of foundation models to tackle increasingly complex tasks.
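For reference, the core operation of the transformer is scaled dot-product attention, in which each output position is a weighted mix of the values at all positions; this is what lets the model capture dependencies across the whole input. Here \(Q\), \(K\), and \(V\) are the query, key, and value matrices and \(d_k\) is the dimension of the keys:

```latex
\operatorname{Attention}(Q, K, V) = \operatorname{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```

Because every position attends to every other position in a single matrix product, the computation parallelizes well, which is a large part of why the architecture scales.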

Accelerating Generative AI on AWS

AWS is committed to helping customers harness the power of generative AI by addressing four key considerations for building and deploying applications at scale:

1. Ease of Development: AWS provides tools and frameworks that simplify the process of building generative AI applications. This includes offering a variety of foundation models that can be tailored to specific use cases.

2. Data Differentiation: Customizing foundation models with your own data ensures that they are tailored to your organization's unique needs. AWS ensures that this customization happens in a secure and private environment, leveraging your data as a key differentiator.

3. Productivity Enhancement: AWS offers a suite of generative AI-powered applications and services designed to enhance employee productivity and streamline workflows.

4. Performance and Cost Efficiency: AWS provides a high-performance, cost-effective infrastructure specifically designed for machine learning and generative AI workloads. With over a decade of experience in creating purpose-built silicon, AWS delivers the optimal environment for running, building, and customizing foundation models.

AWS Tools and Services for Generative AI

To support your AI journey, AWS offers a range of tools and services:

1. Amazon Bedrock: Simplifies the process of building and scaling generative AI applications using foundation models.

2. AWS Trainium and AWS Inferentia: Purpose-built accelerators designed to enhance the performance of generative AI workloads.

3. AWS HealthScribe: A HIPAA-eligible service that generates clinical notes automatically.

4. Amazon SageMaker JumpStart: A machine learning hub offering foundation models, pre-built algorithms, and ML solutions that can be deployed with ease.

5. Generative BI Capabilities in Amazon QuickSight: Enables business users to extract insights, collaborate, and visualize data using FM-powered features.

6. Amazon CodeWhisperer (now part of Amazon Q Developer): An AI coding companion that helps developers build applications faster and more securely.

By leveraging these tools and services, AWS empowers organizations to accelerate their AI initiatives and unlock the full potential of generative AI.
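To make in-context learning and Amazon Bedrock concrete, the sketch below builds a few-shot request in the message format of the Bedrock Runtime Converse API: the examples live in the prompt, and no model weights change. It assumes a boto3 `bedrock-runtime` client passed in as `bedrock_runtime` and a model ID you have access to (both placeholders); `few_shot_messages` and `classify` are hypothetical helpers.

```python
def few_shot_messages(examples: list[tuple[str, str]], query: str) -> list[dict]:
    """Turn (input, output) example pairs plus a new query into Converse-style messages."""
    messages = []
    for user_text, assistant_text in examples:
        # Each example becomes a user turn followed by the desired assistant answer.
        messages.append({"role": "user", "content": [{"text": user_text}]})
        messages.append({"role": "assistant", "content": [{"text": assistant_text}]})
    messages.append({"role": "user", "content": [{"text": query}]})
    return messages


def classify(bedrock_runtime, model_id: str, examples: list[tuple[str, str]], query: str) -> str:
    """Send the few-shot conversation via Converse and return the model's text reply.

    `bedrock_runtime` is assumed to be boto3.client("bedrock-runtime").
    """
    response = bedrock_runtime.converse(
        modelId=model_id,
        messages=few_shot_messages(examples, query),
        inferenceConfig={"maxTokens": 50, "temperature": 0.0},
    )
    return response["output"]["message"]["content"][0]["text"]
```

For example, two labeled reviews such as `("Great support team!", "positive")` and `("The delivery was late again.", "negative")` are enough to steer the model toward one-word sentiment labels for a new review.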

Some examples of how Ankercloud leverages AWS GenAI solutions

- Ankercloud used Amazon Bedrock and Amazon SageMaker to power VisionForge, a tool that creates designs tailored to a user’s vision, democratizing creative modeling for everyone. Our client Arrivae, a leading interior design organization, used VisionForge, and we helped them achieve a 15% improvement in interior design image recommendations, with suggestions that align better with user prompts and are of higher quality. Additionally, improving the segmentation model's accuracy to 65% enabled 10% better personalization of specific objects, significantly enhancing the user experience and satisfaction.

- In another Amazon SageMaker project, Ankercloud worked with Minalyze, the world's leading manufacturer of XRF core scanning devices and software for geological data display. We built a ready-to-use, preconfigured Amazon SageMaker process for image object classification and OCR analysis models, along with an MLOps pipeline. This increased the speed and accuracy of object classification and OCR, improving operational efficiency.

- Ankercloud helped Federmeister, a facade building company, address their slow quote generation process by deploying an AI and ML solution on Amazon SageMaker that automatically detects, classifies, and measures facade elements from uploaded images, cutting processing time from two weeks to just 8 hours. The system, trained on extensive datasets, achieves about 80% accuracy in identifying facade components. This upgrade not only reduced manual labor but also improved the company's ability to handle workload fluctuations, greatly improving operational efficiency and responsiveness.

Ankercloud is an Advanced Tier AWS Service Partner, which enables us to harness AWS's extensive cloud infrastructure and services to help businesses transform and scale their operations efficiently.

Streamlining AWS Architecture Diagrams with Automated Title Insertion in draw.io

As a pre-sales engineer, creating detailed architecture diagrams is crucial to my role. These diagrams, particularly those showcasing AWS services, are essential for effectively communicating complex infrastructure setups to clients. However, manually adding titles to each AWS icon in draw.io is repetitive and time-consuming. Ensuring that every icon is correctly labeled becomes even more challenging under tight deadlines or frequent updates.

How This Tool Solved My Problem

To address this, I found a Python script by typex1 that automates title insertion for AWS icons in draw.io diagrams. Here’s how this tool has transformed my workflow:

- Time Efficiency: The script automatically detects AWS icons in the draw.io file and inserts the official service names as titles. This eliminates the need for me to manually add titles, saving a significant amount of time, especially when working on large diagrams.

- Accuracy: By automating the title insertion, the script ensures that all AWS icons are consistently and correctly labeled. This reduces the risk of errors or omissions that could occur with manual entry.

- Seamless Integration: The script runs in the background, continuously monitoring the draw.io file for changes. Whenever a new AWS icon is added without a title, the script updates the file, and draw.io prompts for synchronization. This seamless integration means I can continue working on my diagrams without interruption.

- Focus on Core Tasks: With the repetitive task of title insertion automated, I can focus more on designing and refining the architecture of the diagrams. This allows me to deliver higher quality and more detailed diagrams to clients, enhancing the overall presentation and communication.
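The core idea can be sketched in a few lines of standard-library Python. This is not typex1's script, only a minimal illustration under stated assumptions: the diagram is saved as uncompressed XML, AWS service icons carry `resIcon=mxgraph.aws4.<key>` in their style (as draw.io's AWS service icons typically do), and `AWS_TITLES` is a small hypothetical sample mapping. The file-watching part is omitted.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical sample mapping from draw.io AWS icon keys to display titles;
# a real tool needs a much larger table.
AWS_TITLES = {
    "lambda": "AWS Lambda",
    "s3": "Amazon Simple Storage Service",
    "ec2": "Amazon EC2",
}

# Assumption: service icons carry "resIcon=mxgraph.aws4.<key>" in their style string.
ICON_RE = re.compile(r"resIcon=mxgraph\.aws4\.([A-Za-z0-9_]+)")


def insert_titles(xml_text: str) -> tuple[str, int]:
    """Fill empty labels of recognized AWS icons; return the new XML and the number of cells changed."""
    root = ET.fromstring(xml_text)
    changed = 0
    for cell in root.iter("mxCell"):
        match = ICON_RE.search(cell.get("style", ""))
        if not match or cell.get("value"):
            continue  # not an AWS icon, or it already has a title
        title = AWS_TITLES.get(match.group(1))
        if title:
            cell.set("value", title)
            changed += 1
    return ET.tostring(root, encoding="unicode"), changed
```

Existing labels are never overwritten, so running it repeatedly (as a background watcher would) is safe: a second pass over its own output changes nothing.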

Conclusion

The automated title insertion tool has significantly improved my efficiency and accuracy when creating AWS architecture diagrams in draw.io. By automating a repetitive and error-prone task, I can now focus on the more critical aspects of diagram creation, ultimately delivering better results for clients.

Credits

Special thanks to typex1 for creating this incredibly useful tool. The efforts and contributions of the open-source community continue to enhance our productivity and streamline our workflows. If you encounter any issues or have suggestions for improvements, feel free to reach out or file an issue on the GitHub repository.

By leveraging automation, we can eliminate mundane tasks and concentrate on delivering value through our technical expertise. Happy diagramming!

FAQs

Some benefits of using cloud computing services include cost savings, scalability, flexibility, reliability, and increased collaboration.

Ankercloud takes data privacy and compliance seriously and adheres to industry best practices and standards to protect customer data. This includes strong encryption, access controls, regular security audits, and compliance with standards and regulations such as ISO 27001, GDPR, and HIPAA, depending on the specific requirements of the customer.

The main types of cloud computing models are Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). Each offers different levels of control and management for users.

Public clouds are owned and operated by third-party providers, private clouds are dedicated to a single organization, and hybrid clouds combine elements of both public and private clouds. The choice depends on factors like security requirements, scalability needs, and budget constraints.

Cloud computing services typically offer pay-as-you-go or subscription-based pricing models, where users pay only for the resources they consume. Prices may vary based on factors like usage, storage, data transfer, and additional features.

The process of migrating applications to the cloud depends on various factors, including the complexity of the application, the chosen cloud provider, and the desired deployment model. It typically involves assessing your current environment, selecting the appropriate cloud services, planning the migration strategy, testing and validating the migration, and finally, executing the migration with minimal downtime.

Ankercloud provides various levels of support to its customers, including technical support, account management, training, and documentation. Customers can access support through various channels such as email, phone, chat, and a self-service knowledge base.

The Ankercloud Team loves to listen