From project-specific support to managed services, we help you accelerate time to market, maximize cost savings, and realize your growth ambitions.

Industry-Specific Cloud Solutions

Revolutionizing Mobility with Tailored Cloud Solutions

Revolutionizing Healthcare with Tailored Cloud Solutions

Revolutionizing Life Sciences with Tailored Cloud Solutions

Revolutionizing Manufacturing with Tailored Cloud Solutions

Revolutionizing Financial Services with Tailored Cloud Solutions

Revolutionizing Process Automation with Tailored Cloud Solutions

Revolutionizing ISV / Technology with Tailored Cloud Solutions

Revolutionizing Public Sector/Non-Profit with Tailored Cloud Solutions

Countless Happy Clients and Counting!

Awards and Recognition

Our Latest Achievement

Ankercloud: Partners with AWS, GCP, and Azure

We excel through partnerships with industry giants like AWS, GCP, and Azure, offering innovative solutions backed by leading cloud technologies.

Check out our blog

Ethical Governance and Regulatory Compliance Solutions for Agentic AI

The Governance Imperative: Building Trust in Autonomous AI

The power of Agentic AI (autonomous systems that make decisions and execute complex workflows) comes with profound responsibility. For enterprises, deploying multi-agent systems requires a governance framework that goes beyond traditional model monitoring: it must provide assurance that every autonomous action is transparent, fair, and legally compliant.

At Ankercloud, we provide comprehensive Agentic AI solutions designed to ensure ethical governance and regulatory compliance across these autonomous systems. Our framework integrates human oversight, intelligent monitoring, and policy-driven automation, empowering organizations to deploy AI responsibly while maintaining transparency, fairness, and accountability at every stage of the agentic workflow.

1. Ethical Governance Framework: Intelligence Embedded

Our governance model doesn't just review decisions; it embeds ethical intelligence directly into the fabric of agent workflows. This ensures that responsible principles are non-negotiable components of every autonomous action.

- Transparency & Explainability: Each agent records its decision logic and context, creating a clear audit trail. This ensures that every outcome, whether automated or escalated, is fully auditable for human review.

- Accountability: Supervisory agents monitor actions and escalate decisions requiring human validation. This creates a clear line of shared responsibility between the human team and the AI system.

- Fairness & Bias Mitigation: We implement continuous fairness assessments to detect and correct biases through automated bias-reporting agents and regular retraining cycles, ensuring equitable outcomes.

- Privacy Protection: Robust data governance policies enforce anonymization, secure transmission, and the principle of minimal data exposure during all inter-agent communication.
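To make the transparency and privacy principles above more concrete, here is a minimal sketch of an append-only decision log, assuming a hypothetical `DecisionRecord` schema, a hypothetical `redact_pii` helper that masks email addresses and long digit runs before anything is stored, and a simple SHA-256 hash chain so that edits to earlier entries become detectable. It is an illustration under those assumptions, not Ankercloud's implementation; production-grade anonymization needs far more than two regular expressions.

```python
import hashlib
import json
import re
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

# Hypothetical patterns: mask email addresses and long digit runs (e.g. phone numbers).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
DIGITS_RE = re.compile(r"\d{7,}")
GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def redact_pii(text: str) -> str:
    """Mask obvious identifiers before they are persisted (data minimization)."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return DIGITS_RE.sub("[NUMBER]", text)


@dataclass
class DecisionRecord:
    agent: str
    action: str
    rationale: str  # the agent's stated decision logic and context
    escalated: bool
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


def _digest(body: dict, prev_hash: str) -> str:
    payload = json.dumps(body, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode()).hexdigest()


class AuditLog:
    """Append-only log; each entry stores the hash of the previous entry."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, record: DecisionRecord) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = asdict(record)
        body["rationale"] = redact_pii(body["rationale"])
        entry = {**body, "prev_hash": prev_hash, "hash": _digest(body, prev_hash)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; any modified entry breaks it."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k not in ("prev_hash", "hash")}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body, prev_hash):
                return False
            prev_hash = entry["hash"]
        return True
```

Hash-chaining is only one way to make a log tamper-evident; a managed write-once store would serve the same audit purpose.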

2. Regulatory Compliance Management: Global Legal Alignment

Navigating the fragmented global landscape of AI regulation is complex. Our solution simplifies this by building compliance into the core architecture, making your AI operations future-ready.

- Global Legal Alignment: Our compliance agents ensure adherence to critical regulations and standards, including GDPR, the EU AI Act, HIPAA, and ethical guidelines such as those from the IEEE.

- Automated Policy Enforcement: Intelligent rule engines validate compliance in real time during key stages: data ingestion, model training, deployment, and post-production monitoring.

- Comprehensive Auditability: We maintain version-controlled logs and digital compliance dashboards, providing full visibility into model updates, decision outcomes, and risk assessments necessary for external audits.
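Stage-based policy enforcement can be pictured as a registry of rules per lifecycle stage, each returning a violation message or nothing. The sketch below assumes hypothetical rule names, context keys (`consent_recorded`, `model_card`, `risk_level`, `human_approved`, `drift_score`), and a 0.2 drift threshold; it shows the shape of a rule engine, not any specific product's rules.

```python
from typing import Callable, Dict, List, Optional

# A rule inspects a context dict and returns a violation message, or None if it passes.
Rule = Callable[[dict], Optional[str]]

DRIFT_LIMIT = 0.2  # hypothetical tolerance for post-production drift


def require_consent(ctx: dict) -> Optional[str]:
    return None if ctx.get("consent_recorded") else "personal data ingested without recorded consent"


def require_model_card(ctx: dict) -> Optional[str]:
    return None if ctx.get("model_card") else "model has no documentation (model card)"


def require_human_approval(ctx: dict) -> Optional[str]:
    if ctx.get("risk_level") == "high" and not ctx.get("human_approved"):
        return "high-risk deployment lacks human approval"
    return None


def limit_drift(ctx: dict) -> Optional[str]:
    drift = ctx.get("drift_score", 0.0)
    return f"drift {drift:.2f} exceeds {DRIFT_LIMIT}" if drift > DRIFT_LIMIT else None


# The four stages named above, each with its own (hypothetical) rule set.
POLICIES: Dict[str, List[Rule]] = {
    "data_ingestion": [require_consent],
    "model_training": [require_model_card],
    "deployment": [require_human_approval],
    "post_production": [limit_drift],
}


def enforce(stage: str, ctx: dict) -> List[str]:
    """Run every rule for a stage; an unknown stage raises KeyError instead of silently passing."""
    violations = []
    for rule in POLICIES[stage]:
        message = rule(ctx)
        if message is not None:
            violations.append(message)
    return violations
```

Failing closed on an unknown stage is a deliberate choice here: a typo in a stage name should stop the pipeline rather than skip all checks.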

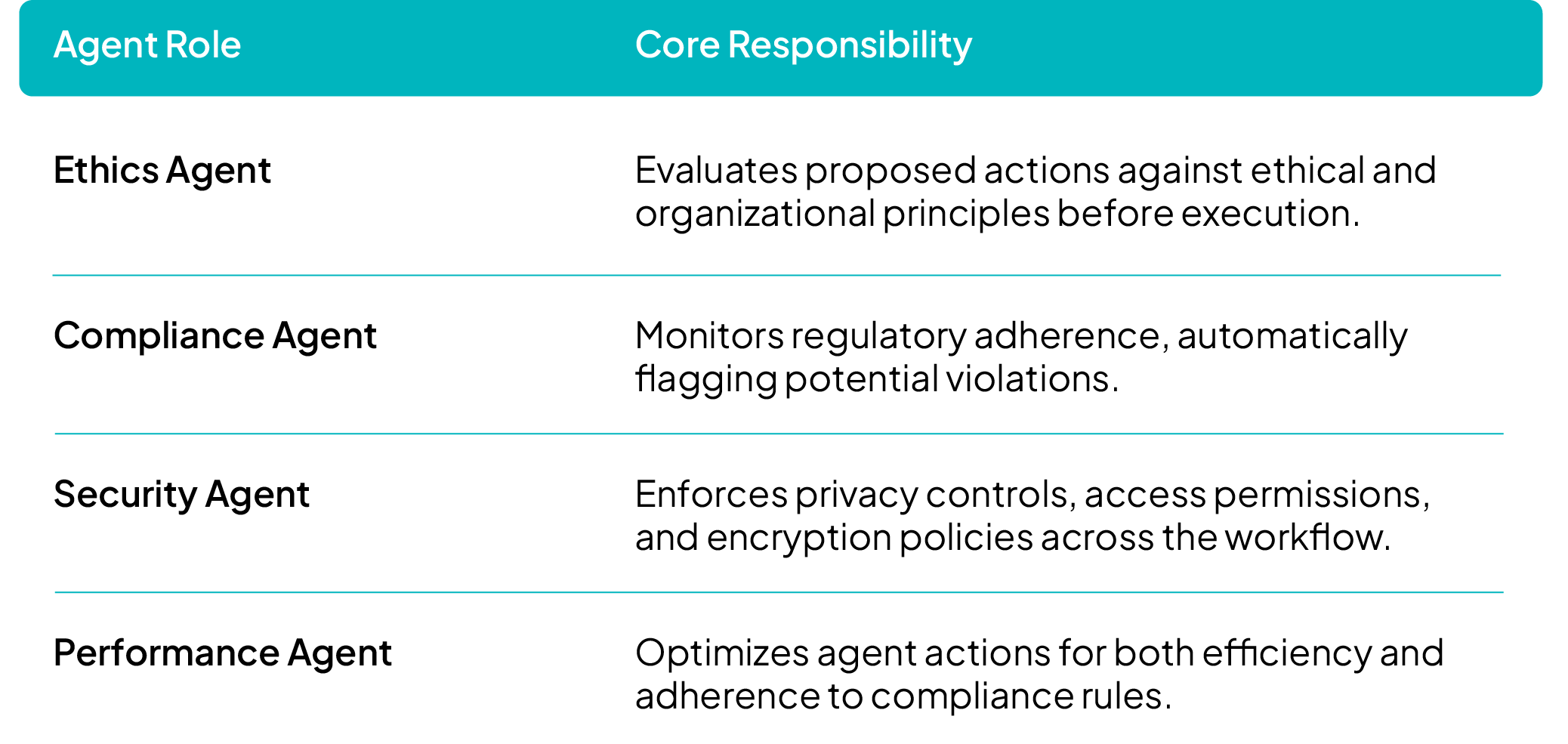

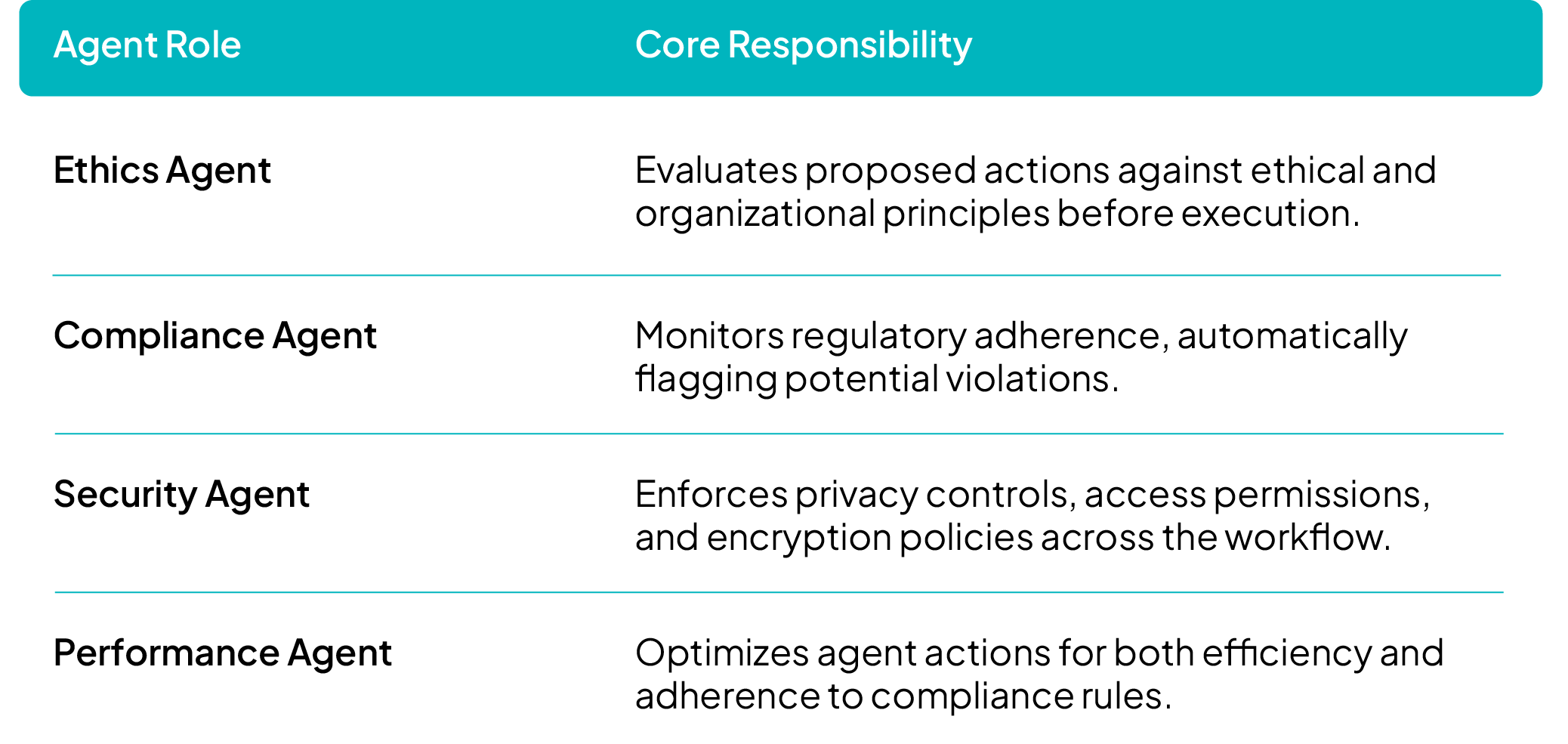

3. Agentic Workflow Architecture: Governance as a Service

In our multi-agent ecosystem, governance is integrated directly into task execution through specialized, collaborative agents.

This architecture utilizes parallel processing to simultaneously evaluate ethics, compliance, and performance, ensuring that ethical clearance is a prerequisite for any task deployment.
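A minimal sketch of "parallel evaluation as a deployment gate", assuming three hypothetical reviewer coroutines (`ethics_review`, `compliance_review`, `performance_review`), an illustrative region allow-list, and a 500 ms latency budget. The reviewers run concurrently with `asyncio.gather`, and the task is cleared only if every verdict approves.

```python
import asyncio
from dataclasses import dataclass

ALLOWED_REGIONS = {"eu-west-1", "eu-central-1"}  # hypothetical data-residency allow-list
LATENCY_BUDGET_MS = 500                          # hypothetical performance budget


@dataclass
class Verdict:
    reviewer: str
    approved: bool
    reason: str = ""


async def ethics_review(task: dict) -> Verdict:
    await asyncio.sleep(0)  # stand-in for a model or API call
    ok = not task.get("uses_protected_attributes", False)
    return Verdict("ethics", ok, "" if ok else "uses protected attributes")


async def compliance_review(task: dict) -> Verdict:
    await asyncio.sleep(0)
    ok = task.get("region") in ALLOWED_REGIONS
    return Verdict("compliance", ok, "" if ok else "region not approved for this data")


async def performance_review(task: dict) -> Verdict:
    await asyncio.sleep(0)
    ok = task.get("expected_latency_ms", float("inf")) <= LATENCY_BUDGET_MS
    return Verdict("performance", ok, "" if ok else "latency budget exceeded")


async def gate(task: dict) -> tuple[bool, list[Verdict]]:
    """Run all reviewers concurrently; clearance requires every one of them to approve."""
    verdicts = await asyncio.gather(
        ethics_review(task), compliance_review(task), performance_review(task)
    )
    return all(v.approved for v in verdicts), list(verdicts)
```

Usage: `cleared, verdicts = asyncio.run(gate({"region": "eu-west-1", "expected_latency_ms": 120}))`.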

4. Continuous Oversight & Improvement

To sustain compliance and ethical integrity in a constantly evolving environment, our systems feature ongoing monitoring and dynamic policy adaptation:

- Feedback Loops: Agents continuously self-assess and report ethical or regulatory anomalies for immediate review.

- Human-in-the-Loop Governance: Critical actions, high-impact decisions, and flagged cases are escalated to human reviewers, ensuring human judgment is applied where necessary.

- Adaptive Compliance Learning: Our systems are designed to evolve with new laws, standards, and enterprise governance frameworks through automated policy updates and model retraining.
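The escalation rule behind human-in-the-loop governance can be as simple as a routing function. This sketch assumes a hypothetical `Decision` shape with an agent-reported confidence score and an impact label, and an illustrative 0.85 confidence floor; flagged, high-impact, or low-confidence decisions go to a human review queue and everything else executes automatically.

```python
from dataclasses import dataclass
from enum import Enum
from queue import Queue

CONFIDENCE_FLOOR = 0.85  # hypothetical threshold below which a human must decide


class Route(Enum):
    AUTO = "auto"
    HUMAN = "human"


@dataclass
class Decision:
    action: str
    confidence: float  # 0.0 to 1.0, as reported by the acting agent
    impact: str        # "low", "medium" or "high"
    flagged: bool = False


def route(decision: Decision) -> Route:
    """Escalate flagged, high-impact, or low-confidence decisions to a person."""
    if decision.flagged or decision.impact == "high" or decision.confidence < CONFIDENCE_FLOOR:
        return Route.HUMAN
    return Route.AUTO


class Supervisor:
    """Hypothetical supervisory agent: executes safe decisions, queues the rest for review."""

    def __init__(self) -> None:
        self.review_queue: Queue = Queue()
        self.executed: list[Decision] = []

    def submit(self, decision: Decision) -> Route:
        r = route(decision)
        if r is Route.HUMAN:
            self.review_queue.put(decision)
        else:
            self.executed.append(decision)
        return r
```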

Key Benefits

By partnering with Ankercloud, you gain:

- End-to-end ethical and regulatory assurance across multi-agent environments.

- Enhanced trust, transparency, and accountability in AI-driven decision-making.

- Real-time compliance validation through automated governance agents.

- A scalable, future-ready governance model adaptable to changing global AI regulations.

Ready to deploy autonomous AI with confidence and integrity?

Partner with Ankercloud to secure the ethical future of your Agentic AI systems.


Agentic AI Use Cases: Transforming Core Business Workflows for Real-World ROI

The Shift: From Experimentation to Execution

Ready to ditch the manual bottlenecks and elevate your business with intelligent automation?

The next wave of AI isn't about isolated tasks; it's about orchestrating entire workflows. Dive into our latest use cases to see how Agentic AI is driving a 90% reduction in manual data entry, cutting enquiry-to-execution time by up to 70%, and transforming product creation with Vision + GenAI Fusion. This is how you achieve real-world ROI, not just potential.

The breakthrough lies in moving beyond simple chatbots and static machine learning models to deploy autonomous systems that solve complex, multi-step business problems end to end. At Ankercloud, we are engineering these multi-agent workflows to drive profound efficiencies across the enterprise.

1. Intelligent Order Entry & Fulfilment Automation

Enterprises frequently lose time and accuracy translating customer purchase orders (POs) across various channels (email, PDF, voice) into their core ERP systems. Agentic AI automates this entire order lifecycle, from customer inquiry to final dispatch.

Solution: A Multi-Agent Order Orchestration System


Outcome

90% reduction in manual data entry.

End-to-end order processing automation.

Integration-ready with systems like SAP, Salesforce, and custom CRMs.
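The order flow can be sketched as three cooperating agents: extraction, validation, and an ERP write. In practice the extraction step would be an LLM or OCR model reading emails, PDFs, or transcribed calls; here it is a regex stand-in over an assumed "SKU: …, QTY: …" text format, and `CATALOG` and `erp_agent` are hypothetical placeholders rather than a real SAP or Salesforce API.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical PO line format, e.g. "SKU: RING-001, QTY: 2".
LINE_RE = re.compile(r"SKU[:\s]+([A-Z0-9-]+)\s*[,;]?\s*QTY[:\s]+(\d+)", re.IGNORECASE)
CATALOG = {"RING-001": 120.0, "CHAIN-042": 80.0}  # hypothetical product master with unit prices


@dataclass
class OrderLine:
    sku: str
    quantity: int


def extraction_agent(raw_text: str) -> List[OrderLine]:
    """Stand-in for an LLM/OCR agent: pull (SKU, quantity) pairs out of free text."""
    return [OrderLine(sku.upper(), int(qty)) for sku, qty in LINE_RE.findall(raw_text)]


def validation_agent(lines: List[OrderLine]) -> List[str]:
    """Check extracted lines against master data before anything touches the ERP."""
    errors = [] if lines else ["no order lines found"]
    for line in lines:
        if line.sku not in CATALOG:
            errors.append(f"unknown SKU {line.sku}")
        if line.quantity <= 0:
            errors.append(f"invalid quantity for {line.sku}")
    return errors


def erp_agent(lines: List[OrderLine]) -> dict:
    """Hypothetical stand-in for creating a sales order in the ERP."""
    total = sum(CATALOG[line.sku] * line.quantity for line in lines)
    return {"status": "created", "lines": len(lines), "total": total}


def process_order(raw_text: str) -> dict:
    lines = extraction_agent(raw_text)
    errors = validation_agent(lines)
    if errors:
        return {"status": "needs_review", "errors": errors}  # hand off to a human
    return erp_agent(lines)
```

Routing validation failures to review rather than guessing keeps the "no manual entry" path limited to orders the agents can fully verify.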

2. Enquiry-to-Execution Workflow (E2E Intelligent Process Automation)

The time lost between receiving a customer inquiry and beginning the final deliverable (e.g., quote -> approval -> project start) creates unnecessary human bottlenecks. Agentic AI now automates this "middle office" process.

Solution: A Multi-Agent Process Automation System


Outcome

Cuts enquiry-to-execution time by up to 70%.

Removes human bottlenecks from approvals.

Ensures consistent quote and contract templates via GenAI.

3. Image Generation & 3D Model Conversion (Vision + GenAI Fusion)

Industries like jewelry, textiles, e-commerce, and architecture require fast, high-quality asset creation (e.g., product photography -> 3D render -> virtual showroom). Agentic AI fuses Vision and Generative models to automate this content pipeline.

Solution: An AI Image-to-3D Conversion Suite


Outcome

10× faster product modeling.

80% cost reduction in 3D asset creation.

Assets are ready for metaverse and AR commerce.

4. Autonomous Quotation & Pricing Engine

Sales teams need dynamic quoting that reacts instantly to live market inputs (demand, margin, competition) but often get slowed down by manual approval loops. Agentic AI generates, optimizes, and approves quotes autonomously.

Solution: A Multi-Agent Quoting System


Outcome

Enables real-time dynamic pricing.

Accelerates sales closure and improves margin accuracy.

Reduces approval loops and human bottlenecks.
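One way a quoting agent could react to demand, margin, and competition while still knowing when to stop and ask a human: price for a target margin, nudge the price by a capped demand adjustment, cap it relative to the competitor, then auto-approve only if the resulting margin clears a floor. Every constant and the pricing formula itself are hypothetical assumptions for illustration, not Ankercloud's pricing logic.

```python
from dataclasses import dataclass

TARGET_MARGIN = 0.30   # hypothetical target gross margin (as a share of price)
MIN_MARGIN = 0.15      # hypothetical floor; below this a human must approve
MAX_DEMAND_ADJ = 0.10  # demand can move the price by at most ±10%
MAX_PREMIUM = 0.05     # never quote more than 5% above the competitor


@dataclass
class MarketInputs:
    unit_cost: float
    demand_index: float  # 1.0 = normal demand; above 1.0 = demand above normal
    competitor_price: float


@dataclass
class Quote:
    price: float
    margin: float
    auto_approved: bool


def price_quote(m: MarketInputs) -> Quote:
    price = m.unit_cost / (1 - TARGET_MARGIN)  # price that yields the target margin
    adj = max(-MAX_DEMAND_ADJ, min(MAX_DEMAND_ADJ, m.demand_index - 1.0))
    price *= 1 + adj                            # capped demand-driven adjustment
    price = min(price, m.competitor_price * (1 + MAX_PREMIUM))
    margin = (price - m.unit_cost) / price
    # Only quotes that still clear the margin floor skip the manual approval loop.
    return Quote(round(price, 2), round(margin, 4), margin >= MIN_MARGIN)
```

For example, `price_quote(MarketInputs(70, 1.0, 75))` is capped by the competitor at 78.75, leaving a margin of about 11%, so it is routed for approval instead of being sent automatically.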

The Future of Work is Autonomous

These use cases demonstrate that Agentic AI is not just about isolated tasks; it’s about holistic workflow automation. By deploying multi-agent systems, Ankercloud helps enterprises eliminate manual friction, reduce operational costs, and unlock unprecedented speed.

Ready to identify your first high-ROI Agentic AI use case?

Partner with Ankercloud to transform your core business processes.


Your Trusted Ally in Intelligent Automation: Agentic AI

The Shift: From Reactive AI to Proactive Autonomy

For years, Artificial Intelligence has been a powerful tool, but one that required constant human direction. You built the model, but your team still had to manually prepare data, trigger processes, and interpret every output. This fragmentation created bottlenecks and limited scalability.

The new era of Agentic AI changes everything.

Agentic AI systems are designed not just to analyze, but to act autonomously. They orchestrate complex, multi-step workflows end-to-end, making decisions, collaborating with other systems, and continually learning to achieve a high-level goal—all without constant human oversight.

This shift transforms AI from a back-office tool into a proactive Business Ally that drives measurable results and unparalleled efficiency.

The Core Pillars of Agentic AI Value

At Ankercloud, we engineer Agentic AI solutions tailored to address your most critical business challenges. These agents deliver value across distinct areas:

1. Autonomous Decision Agents

- Core Function: Accelerate decisions and deliver faster outcomes.

- Example Impact: Instantly approving loans based on credit models or dynamically adjusting pricing in e-commerce based on real-time demand.

2. Data Analytics Agents

- Core Function: Transform complex data into clear, predictive insights.

- Example Impact: Instantly summarizing large legal contracts, or providing conversational answers to complex finance queries without needing SQL.

3. Process Automation Agents

- Core Function: Remove repetitive tasks and eliminate human error.

- Example Impact: Fully automating HR onboarding (drafting contracts, provisioning access) or managing inventory by autonomously reordering stock.
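"Autonomously reordering stock" usually rests on a reorder-point rule. The sketch below uses the textbook formula, reorder point = average daily demand × lead time + safety stock, with safety stock = z × σ(daily demand) × √(lead time), assuming roughly normal, independent daily demand; z ≈ 1.65 corresponds to about a 95% cycle service level. The `reorder_agent` function and its parameters are hypothetical.

```python
import math
from statistics import mean, stdev


def reorder_point(daily_demand: list[float], lead_time_days: float, z: float = 1.65) -> float:
    """Average demand over the lead time plus safety stock (needs >= 2 demand samples)."""
    safety_stock = z * stdev(daily_demand) * math.sqrt(lead_time_days)
    return mean(daily_demand) * lead_time_days + safety_stock


def reorder_agent(on_hand: int, on_order: int, daily_demand: list[float],
                  lead_time_days: float, order_qty: int) -> int:
    """Hypothetical agent step: return how many units to order now (0 if none)."""
    inventory_position = on_hand + on_order  # count stock already in transit
    if inventory_position <= reorder_point(daily_demand, lead_time_days):
        return order_qty
    return 0
```

A learning agent could refine the demand history and lead-time estimates over time, but the trigger itself stays simple and auditable.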

4. Learning & Adaptive Agents

- Core Function: Continuously evolve workflows and outcomes based on new data and feedback.

- Example Impact: Fine-tuning a chatbot's responses after every customer interaction to improve accuracy, or optimizing a logistics route daily as it learns traffic patterns.

Multi-Agent Workflows: Collaboration for Complex Tasks

Real-world business problems are rarely solved by a single tool. Ankercloud specializes in building tailored multi-agent systems that think, act, and collaborate as a single intelligent unit. These systems allow specialized agents (e.g., LLMs, vision models, speech models) to coordinate holistically.

- Sequential Task Coordination: Agents pass outputs to each other in a pipeline. For example, sending an audio input to a speech agent for transcription, then forwarding the transcribed text to an LLM agent for summarization or translation.

- Parallel Task Execution: Multiple agents can work simultaneously on different subtasks, drastically reducing latency and increasing throughput.

- Dynamic Collaboration: Agents communicate, delegate, and synchronize with each other, sharing intermediate results and adjusting strategies in real time—mimicking a high-performing human team.
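The sequential and parallel patterns above can be combined in a few lines of `asyncio`. In this sketch every agent is a hypothetical stub: `speech_agent` pretends the "audio" bytes are already text, and `translate_agent` only tags its input instead of translating. The speech step runs first (sequential coordination), then summarization, translation, and sentiment run concurrently on the transcript (parallel execution).

```python
import asyncio


# Hypothetical stand-ins for model calls; real agents would call speech/LLM endpoints.
async def speech_agent(audio: bytes) -> str:
    await asyncio.sleep(0.1)      # simulated model latency
    return audio.decode("utf-8")  # placeholder "transcription"


async def summarize_agent(text: str) -> str:
    await asyncio.sleep(0.1)
    return text.split(".")[0] + "."  # placeholder summary: first sentence


async def translate_agent(text: str) -> str:
    await asyncio.sleep(0.1)
    return f"[de] {text}"  # placeholder: tags the text instead of translating it


async def sentiment_agent(text: str) -> str:
    await asyncio.sleep(0.1)
    return "negative" if "delay" in text.lower() else "neutral"


async def pipeline(audio: bytes) -> dict:
    transcript = await speech_agent(audio)  # sequential: downstream agents need this output
    summary, translation, sentiment = await asyncio.gather(  # parallel fan-out
        summarize_agent(transcript),
        translate_agent(transcript),
        sentiment_agent(transcript),
    )
    return {"transcript": transcript, "summary": summary,
            "translation": translation, "sentiment": sentiment}
```

Because the three downstream agents overlap, the whole run takes roughly two simulated latencies (about 0.2 s) rather than four.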

How Ankercloud Helps You Deploy Agentic AI

Transitioning to autonomous workflows requires specialized expertise to ensure security, scalability, and integration with your existing infrastructure. As an AWS/GCP Premier Partner, Ankercloud acts as your end-to-end architect and deployment partner:

- Cloud-Native Architecture: We leverage native services like Google Vertex AI Agents and Amazon Bedrock Agents to build agents that are inherently secure, scalable, and cost-optimized within your multi-cloud environment.

- Workflow Orchestration: We design the underlying systems that allow your agents to collaborate using advanced protocols, ensuring seamless communication across databases and external APIs.

- Security & Governance: We embed Zero Trust principles and MLOps governance from the ground up, guaranteeing your agents operate reliably and compliantly, minimizing risk.

- Focus on ROI: We prioritize use cases that target your highest operational costs, ensuring the deployment of your agents delivers immediate, measurable Return on Investment (ROI).

Ready to Meet Your New Business Ally?

The future of work is not just assisted; it’s autonomous. By partnering with Ankercloud, you gain the expertise needed to deploy these next-generation autonomous workflows successfully.

Partner with Ankercloud to transform your operations with production-grade Agentic AI solutions.

The Ankercloud Team loves to listen
