
Quality Management in the AI Era: Building Trust and Compliance by Design

The Trust Test: Why Quality is the New Frontier in AI

When we talk about quality in AI, we're not just measuring accuracy; we're measuring trust. An AI model with 99% accuracy is useless, or worse, dangerous, if its decisions are biased, non-compliant, or can't be explained.

For enterprises leveraging AI in critical areas (from manufacturing quality control to financial risk assessment), a rigorous Quality Management system is non-negotiable. This process must cover the entire lifecycle, ensuring that the AI works fairly, securely, and safely - a concept often known as Responsible AI.

We break down the AI Quality Lifecycle into five essential stages, guaranteeing that quality is baked into every decision.

The 5-Stage AI Quality Lifecycle Framework

Quality assurance for AI systems must start long before the model is built and continue long after deployment:

1. Data Governance & Readiness

The model is only as good as the data it trains on. We focus on validation before training:

- Data Lineage & Labeling: Enforcing traceable protocols and dataset versioning.

- Bias Detection: Pre-model checks for data bias and noise to ensure representativeness across demographics or time segments.

- Secure Access: Enforcing anonymization and strict access controls from the outset.

2. Model Development & Validation

Building the model resiliently:

- Multi-Split Validation: Using cross-domain validation methods, not just random splits, to ensure the model performs reliably in varied real-world scenarios.

- Stress Testing: Rigorous testing on adversarial and out-of-distribution inputs to assess robustness.

- Evaluation Beyond Accuracy: Focusing on balanced fairness and robustness metrics, not just high accuracy scores.
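To make "evaluation beyond accuracy" concrete, here is a minimal sketch that reports accuracy and positive-prediction rate per group and the gap between groups (often called the demographic parity difference). The function names and the toy data are assumptions made for illustration; a real evaluation would use your own labels, predictions, and protected attributes.

```python
from collections import defaultdict

def group_metrics(y_true, y_pred, groups):
    """Return accuracy and positive-prediction rate for each group."""
    stats = defaultdict(lambda: {"n": 0, "correct": 0, "positive": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        s = stats[group]
        s["n"] += 1
        s["correct"] += int(truth == pred)
        s["positive"] += int(pred == 1)
    return {
        g: {
            "accuracy": s["correct"] / s["n"],
            "positive_rate": s["positive"] / s["n"],
        }
        for g, s in stats.items()
    }

def demographic_parity_gap(metrics):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = [m["positive_rate"] for m in metrics.values()]
    return max(rates) - min(rates)

# Toy data (hypothetical): overall accuracy is 7/8, yet the groups differ.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

metrics = group_metrics(y_true, y_pred, groups)
gap = demographic_parity_gap(metrics)
```

In this toy example the overall accuracy looks healthy, but group "b" never receives a positive prediction, which is exactly the kind of imbalance a single accuracy score hides.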

3. Explainability & Documentation

If you can't explain it, you can't trust it. We prioritize transparency:

- Interpretable Techniques: Applying methods like SHAP and LIME to understand how the model made its decision.

- Model Cards: Generating comprehensive documentation that describes objectives, intended users, and, critically, model limitations.

- Traceable Logs: Maintaining clear logs for input features and versioned training artifacts for auditability.

4. Risk Assurance & Responsible AI Controls

This is the proactive safety net:

- Harm Assessment: Formal assessment of misuse risk (intentional and unintentional).

- Guardrail Policies: Defining non-negotiable guardrails for unacceptable use cases.

- Human-in-the-Loop (HITL): Implementing necessary approval gates for safety-critical or high-risk outcomes.

5. Deployment, Monitoring & Continuous Improvement

Quality demands perpetual vigilance:

- Continuous Monitoring: Real-time tracking of accuracy, model drift, latency, and hallucination rates in production.

- Safe Rollouts: Utilizing canary releases and shadow testing before full production deployment.

- Reproducibility: Implementing controlled retraining pipelines to ensure consistency and continuous compliance enforcement.
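As one possible illustration of drift monitoring, the sketch below computes the Population Stability Index (PSI) between a reference sample (for example, training-time scores) and a current production sample. The bin edges, the sample values, and the 0.25 review threshold are assumptions for this example; the threshold is a commonly quoted rule of thumb, not a standard.

```python
import math

def bin_proportions(values, edges, eps=1e-6):
    """Share of values falling in each bin; empty bins are floored at eps."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    total = sum(counts) or 1  # avoid dividing by zero if nothing fell in range
    return [max(c / total, eps) for c in counts]

def psi(reference, current, edges):
    """Population Stability Index between a reference and a current sample."""
    ref = bin_proportions(reference, edges)
    cur = bin_proportions(current, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))

# Hypothetical model scores at training time and in production.
edges = [0.0, 0.25, 0.5, 0.75, 1.0]
training_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
production_scores = [0.6, 0.7, 0.8, 0.9, 0.8, 0.9, 0.7, 0.95]

drift = psi(training_scores, production_scores, edges)
needs_review = drift > 0.25  # assumed rule-of-thumb threshold
```

A check like this could run on a schedule against each monitored feature or score, with a breach triggering the controlled retraining pipeline rather than an ad hoc fix.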

Cloud: The Backbone of Scalable, High-Quality AI

Sustaining this level of governance and monitoring is impractical without elastic, managed infrastructure. Cloud platforms like AWS and Google Cloud (GCP) are not just hosting providers; they supply the logging, access-control, and pipeline services that make compliance enforceable.

Cloud Capabilities Powering Quality Management:

- MLOps Pipelines: Automated, reproducible pipelines (using services like SageMaker or Vertex AI) support consistent retraining and continuous improvement.

- Centralized Compute: High-performance compute and data lakes enable fast model testing and quality insights across global teams and diverse data sets.

- Auditability & Compliance: Tools like AWS CloudTrail / GCP Cloud Logging provide unalterable audit trails, while security controls (AWS KMS / GCP KMS, IAM) ensure private and regulated workloads are protected.

This ensures that the quality of AI outputs is backed by governance, spanning everything from software delivery to manufacturing IoT and customer interactions.

Ankercloud: Your Partner in Responsible AI Quality

Quality and Responsible AI are two sides of the same coin. A model with high accuracy but biased outcomes is a failure. We specialize in using cloud-native tools to enforce these principles:

- Bias Mitigation: Leveraging tools like Amazon SageMaker Clarify and Vertex Explainable AI to continuously track fairness and explainability.

- Continuous Governance: Integrating cloud security services for continuous compliance enforcement across your entire MLOps workflow.

Ready to move beyond basic accuracy and build AI that is high-quality, responsible, and trusted?

Partner with Ankercloud to achieve continuous, globally scalable quality.

Beyond Dashboards: The Four Dimensions of Data Analysis for Manufacturing & Multi-Industries

The Intelligence Gap: Why Raw Data Isn't Enough

Every modern business, whether on a shop floor or in a financial trading room, is drowning in data: sensor logs, transactions, sales records, and ERP entries. But how often does that raw data actually tell you what to do next?

Data Analysis bridges this gap. It's the essential process of converting raw operational, machine, supply chain, and enterprise data into tangible, actionable insights for improved productivity, quality, and decision-making. We use a combination of historical records and real-time streaming data from sources like IoT sensors, production logs, and sales systems to tell a complete story.

To truly understand that story, we rely on four core techniques that move us from simply documenting the past to confidently dictating the future.

The Four Core Techniques: Moving from 'What' to 'Do This'

Think of data analysis as a journey with increasing levels of intelligence:

- Descriptive Analytics (What Happened): This is your foundation. It answers: What are my current KPIs? We build dashboards showing OEE (Overall Equipment Effectiveness), defect percentage, and downtime trends. It’s the essential reporting layer.

- Diagnostic Analytics (Why It Happened): This is the root cause analysis (RCA). It answers: Why did that machine fail last week? We drill down into correlations, logs, and sensor data to find the precise factors that drove the outcome.

- Predictive Analytics (What Will Happen): This is where AI truly shines. It answers: Will this asset break in the next month? We use time series models (like ARIMA or Prophet) alongside other ML models to generate failure predictions, demand forecasts, and churn probabilities.

- Prescriptive Analytics (What Should Be Done): This is the highest value. It answers: What is the optimal schedule to prevent that failure and meet demand? This combines predictive models with optimization engines (operations research, or OR, models) to recommend the exact action needed, such as an optimal schedule or a smart pricing strategy.
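As a small example of the descriptive layer, OEE is conventionally calculated as Availability x Performance x Quality. The sketch below shows that calculation; the function name, parameter names, and shift figures are assumptions for illustration.

```python
def oee(planned_minutes, downtime_minutes, ideal_cycle_seconds, total_units, good_units):
    """Compute OEE and its three factors for one production run."""
    run_minutes = planned_minutes - downtime_minutes
    availability = run_minutes / planned_minutes
    # Performance compares the ideal time for the units produced with actual run time.
    performance = (ideal_cycle_seconds * total_units) / (run_minutes * 60)
    quality = good_units / total_units
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }

# Hypothetical shift: 480 planned minutes, 60 minutes down,
# 30-second ideal cycle, 700 units made, 665 of them good.
shift = oee(
    planned_minutes=480,
    downtime_minutes=60,
    ideal_cycle_seconds=30,
    total_units=700,
    good_units=665,
)
```

Keeping the three factors separate matters for the diagnostic step: a low OEE driven by availability points to downtime, while one driven by quality points to defects.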

Multi-Industry Use Cases: Solving Real Business Problems

The principles of advanced analytics apply everywhere, from the shop floor to the trading floor. We use the same architectural patterns—the Modern Data Stack and a Medallion Architecture—to transform different kinds of data into competitive advantage.

In Manufacturing

- Predictive Maintenance: Using ML models to analyze vibration, temperature, and load data from IoT sensors to predict machine breakdowns before they occur.

- Quality Analytics: Fusing Computer Vision systems with core analytics to detect defects, reduce scrap, and maintain consistent product quality.

- Supply Chain Optimization: Analyzing vendor risk scoring and lead time data to ensure stock-out prevention and precise production planning.

In Other Industries

- Fraud Detection (BFSI): Deploying anomaly and classification models that flag suspicious transactions in real-time, securing assets and reducing financial risk.

- Route Optimization (Logistics): Using GPS and route history data with optimization engines to recommend the most efficient routes and ETAs.

- Customer 360 (Retail/Telecom): Using clustering and churn models to segment customers, personalize retention strategies, and accurately forecast demand.

Ankercloud: Your Partner in Data Value

Moving from basic descriptive dashboards to autonomous prescriptive action requires expertise in cloud architecture, data science, and MLOps.

As an AWS and GCP Premier Partner, Ankercloud designs and deploys your end-to-end data platform on the world's leading cloud infrastructure. We ensure:

- Accuracy: We build robust Data Quality and Validation pipelines to ensure data freshness and consistency.

- Governance: We establish strict Cataloging & Metadata frameworks (using tools like Glue/Lake Formation) to provide controlled, logical access.

- Value: We focus on delivering tangible Prescriptive Analytics that result in better forecast accuracy, faster root cause fixing, and verifiable ROI.

Ready to stop asking "What happened?" and start knowing "What should we do?"

Partner with Ankercloud to unlock the full value of your enterprise data.

Data Agents: The Technical Architecture of Conversational Analysis on GCP

Conversational Analytics: Architecting the Data Agent for Enterprise Insight

The emergence of Data Agents is revolutionizing enterprise analytics. These systems are far more than just sophisticated chatbots; they are autonomous, goal-oriented entities designed to understand natural language requests, reason over complex data sources, and execute multi-step workflows to deliver precise, conversational insights. This capability, known as Conversational Analysis, transforms the way every user, regardless of technical skill, interacts with massive enterprise datasets.

This article dissects a robust, serverless architecture on Google Cloud Platform (GCP) for a Data Wise Agent App, providing a technical roadmap for building scalable and production-ready AI agents.

Core Architecture: The Serverless Engine

The solution is anchored by an elastic, serverless core that handles user traffic and orchestrates the agent's complex tasks, minimizing operational overhead.

Gateway and Scaling: The Front Door

- Traffic Management: Cloud Load Balancing sits at the perimeter, providing a single entry point, ensuring high availability, and seamlessly distributing incoming requests across the compute environment.

- Serverless Compute: The core application resides in Cloud Run. This fully managed platform runs the application as a stateless container and scales automatically from zero instances to hundreds as demand changes, which keeps idle cost low while absorbing demand spikes.

The Agent's Operating System and Mindset

The brain of the operation is the Data Wise Agent App, developed using a specialized framework: the Google ADK (Agent Development Kit).

- Role Definition & Tools: ADK is the foundational Python framework that allows the developer to define the agent's role and its available Tools. Tools are predefined functions (like executing a database query) that the agent can select and use to achieve its goal.

- Tool-Use and Reasoning: This framework enables the Large Language Model (LLM) to select the correct external function (Tool) based on the user's conversational query. This interleaving of reasoning steps and tool calls is often called ReAct (Reasoning and Acting). Combined with the framework's Session and Memory handling, it lets the agent carry prior context through complex, multi-turn conversations.
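The idea of roles, tools, and session memory can be sketched in plain Python. This is not the ADK API: `ToyAgent`, both tool functions, and the keyword routing that stands in for the LLM's tool choice are all hypothetical, used only to show the shape of the pattern.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

def run_sales_query(region: str) -> dict:
    """Return total sales for a region (hypothetical tool with canned data)."""
    data = {"emea": 1200, "apac": 950}
    return {"region": region, "total_sales": data.get(region.lower(), 0)}

def list_tables() -> dict:
    """List the tables the agent may query (hypothetical tool)."""
    return {"tables": ["sales", "customers"]}

@dataclass
class ToyAgent:
    role: str
    tools: Dict[str, Callable]
    history: List[dict] = field(default_factory=list)  # session memory

    def choose_tool(self, message: str):
        """Stand-in for the LLM's tool choice: simple keyword routing."""
        text = message.lower()
        if "table" in text:
            return "list_tables", {}
        for region in ("emea", "apac"):
            if region in text:
                return "run_sales_query", {"region": region}
        # No region in this turn: reuse the last one mentioned in the session.
        for turn in reversed(self.history):
            if "region" in turn["args"]:
                return "run_sales_query", {"region": turn["args"]["region"]}
        return "list_tables", {}

    def handle(self, message: str) -> dict:
        name, args = self.choose_tool(message)
        result = self.tools[name](**args)
        self.history.append({"message": message, "tool": name, "args": args, "result": result})
        return result

agent = ToyAgent(
    role="sales analyst",
    tools={"run_sales_query": run_sales_query, "list_tables": list_tables},
)
first = agent.handle("What were sales in EMEA?")
follow_up = agent.handle("And the quarter before?")  # resolved from session history
```

In a real agent the `choose_tool` step is the model's decision, guided by each tool's name, signature, and docstring; the surrounding structure (a tool registry plus per-session history) stays much the same.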

The Intelligence and Data Layer

This layer contains the powerful services the agent interacts with to execute its two primary functions: advanced reasoning and querying massive datasets.

Cognitive Engine: Reasoning and Planning

- Intelligence Source: Vertex AI provides the agent's intelligence, leveraging the gemini-2.5-pro model for its superior reasoning and complex instruction-following capabilities.

- Agentic Reasoning: When a user submits a query, the LLM analyzes the goal, decomposes it into smaller steps, and decides which of its tools to call. This deep reasoning ensures the agent systematically plans the correct sequence of actions against the data.

- Conversational Synthesis: After data retrieval, the LLM integrates the structured results from the database, applies conversational context, and synthesizes a concise, coherent, natural language response—the very essence of Conversational Analysis.

The Data Infrastructure: Source of Truth

The agent needs governed, performant access to enterprise data to fulfill its mission.

- BigQuery (Big Data Dataset): This is the serverless data warehouse used for massive-scale analytics. BigQuery provides the raw horsepower, executing ultra-fast SQL queries over petabytes of data using its massively parallel processing architecture.

- Generative SQL Translation: A core task is translating natural language into BigQuery's GoogleSQL dialect. This translation step is exposed to the LLM as one of its most important Tools.

- Dataplex (Data Catalog): This serves as the organization's unified data governance and metadata layer. The agent leverages the Data Catalog to understand the meaning and technical schema of the data it queries. This grounding process is critical for generating accurate SQL and minimizing hallucinations.

The Conversational Analysis Workflow

The complete process is a continuous loop of interpretation, execution, and synthesis, all handled in seconds:

- User Request: A natural language question is received by the Cloud Run backend.

- Intent & Plan: The Data Wise Agent App passes the request to Vertex AI (Gemini 2.5 Pro). The LLM, guided by the ADK framework and Dataplex metadata, generates a multi-step plan.

- Action (Tool Call): The plan executes the necessary Tool-Use, translating the natural language intent into a structured BigQuery SQL operation.

- Data Retrieval: BigQuery executes the query and returns the precise, raw analytical results.

- Synthesis & Response: The Gemini LLM integrates the raw data, applies conversational context, and synthesizes an accurate natural language answer, completing the Conversational Analysis and sending the response back to the user interface.
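The five steps above can be sketched end to end with local stand-ins. Everything here is an assumption for illustration: `sqlite3` stands in for BigQuery, the `SCHEMA` dictionary stands in for Dataplex metadata, and `plan_sql` and `synthesize` stand in for the two LLM calls.

```python
import sqlite3

# Stand-in for catalog metadata that grounds SQL generation.
SCHEMA = {"sales": ["region", "amount"]}

def plan_sql(question, schema):
    """Stand-in for the LLM planning step: maps one known question shape to SQL."""
    text = question.lower()
    if "sales" in text and "region" in text:
        if not {"region", "amount"} <= set(schema.get("sales", [])):
            raise ValueError("schema does not contain the columns this query needs")
        return (
            "SELECT region, SUM(amount) AS total "
            "FROM sales GROUP BY region ORDER BY region"
        )
    raise ValueError("question not supported by this sketch")

def run_query(conn, sql):
    """Tool call: execute the SQL and return rows as dictionaries."""
    cur = conn.execute(sql)
    cols = [c[0] for c in cur.description]
    return [dict(zip(cols, row)) for row in cur.fetchall()]

def synthesize(rows):
    """Stand-in for the LLM synthesis step: turn rows into a sentence."""
    parts = [f"{r['region']}: {r['total']}" for r in rows]
    return "Total sales by region: " + ", ".join(parts) + "."

def answer(conn, question):
    sql = plan_sql(question, SCHEMA)   # intent and plan
    rows = run_query(conn, sql)        # action and data retrieval
    return synthesize(rows)            # synthesis and response

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("EMEA", 700), ("APAC", 950), ("EMEA", 500)],
)
reply = answer(conn, "What are total sales per region?")
```

Checking the generated SQL against known schema metadata before execution is the part of this sketch that corresponds to grounding: a query that references columns the catalog does not list fails early instead of returning a misleading answer.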

Ankercloud: Your Partner for Production-Ready Data Agents

Building this secure, high-performance architecture requires deep expertise in serverless containerization, advanced LLM orchestration, and BigQuery optimization.

- Architectural Expertise: We design and deploy the end-to-end serverless architecture, ensuring resilience, scalability via Cloud Run and Cloud Load Balancing, and optimal performance.

- ADK & LLM Fine-Tuning: We specialize in leveraging the Google ADK to define sophisticated agent roles and fine-tuning Vertex AI (Gemini) for superior domain-specific reasoning and precise SQL translation.

- Data Governance & Security: We integrate Dataplex and security policies to ensure the agent's operations are fully compliant, governed, and grounded in accurate enterprise context, ensuring the trust necessary for production deployment.

Ready to transform your static dashboards into dynamic, conversational insights?

Partner with Ankercloud to deploy your production-ready Data Agent.

Agentic AI Architecture: Building Autonomous, Multi-Cloud Workflows on AWS & GCP

The Technical Shift: From Monolithic Models to Autonomous Orchestration

Traditional Machine Learning (ML) focuses on predictive accuracy; Agentic AI focuses on autonomous action and complex problem-solving. Technically, this shift means moving away from a single model serving one function to orchestrating a team of specialized agents, each communicating and acting upon real-time data.

Building this requires a robust, cloud-native architecture capable of handling vast data flows, secure communication, and flexible compute resources across platforms like AWS and Google Cloud Platform (GCP).

Architectural Diagram Description


Visual Layout: A central layer labeled "Orchestration Core" connecting to left and right columns representing AWS and GCP services, and interacting with a bottom layer representing Enterprise Data.

1. Enterprise Data & Triggers (Bottom Layer):

- Data Sources: External APIs, Enterprise ERP (SAP/Salesforce), Data Lake (e.g., AWS S3 and GCP Cloud Storage).

- Triggers: User Input (via UI/Chat), AWS Lambda (Event Triggers), GCP Cloud Functions (Event Triggers).

2. The Orchestration Core (Center):

- Function: This layer manages the overall workflow, decision-making, and communication between specialized agents.

- Tools: AWS Step Functions / GCP Cloud Workflows (for sequential task management) and specialized Agent Supervisors (LLMs/Controllers) that coordinate agents and tools, for example via the Model Context Protocol (MCP).

3. Specialized Agents & Models (AWS Side - Left):

- Foundation Models (FM): Amazon Bedrock (access to Claude, Llama 3, Titan)

- Model Hosting: Amazon SageMaker Endpoints (Custom ML Models, Vision Agents)

- Tools: Amazon Kendra (RAG/Knowledge Retrieval), AWS Lambda (Tool/Function Calling)

4. Specialized Agents & Models (GCP Side - Right):

- Foundation Models (FM): Google Vertex AI Model Garden (access to Gemini, Imagen)

- Model Hosting: GCP Vertex AI Endpoints (Custom ML Models, NLP Agents)

- Tools: GCP Cloud SQL / BigQuery (Data Integration), GCP Cloud Functions (Tool/Function Calling)

Key Technical Components and Function

1. The Autonomous Agent Core

Agentic AI relies on multi-agent systems, where specialized agents collaborate to solve complex problems:

- Foundation Models (FM): Leveraging managed services like Amazon Bedrock and GCP Vertex AI Model Garden provides scalable, secure access to state-of-the-art LLMs (like Gemini) and GenAI models without the burden of full infrastructure management.

- Tool Calling / Function Invocation: Agents gain the ability to act by integrating with external APIs and enterprise systems. This is handled by serverless functions (e.g., AWS Lambda or GCP Cloud Functions) that translate the agent's decision into code execution (e.g., checking inventory in SAP).

- RAG (Retrieval-Augmented Generation): Critical for grounding agents in specific enterprise data, improving accuracy and reducing hallucinations. Services like Amazon Kendra or embeddings stored in Vector Databases (like GCP Vertex AI Vector Search) power precise knowledge retrieval.

2. Multi-Cloud Orchestration for Resilience

Multi-cloud deployment provides resilience, avoids vendor lock-in, and optimizes compute costs (e.g., using specialized hardware available only on one provider).

- Workflow Management: Tools like AWS Step Functions or GCP Cloud Workflows are used to define the sequential logic of the multi-agent system (e.g., Task Agent -> Validation Agent -> Execution Agent).

- Data Consistency: Secure, consistent access to enterprise data is maintained via secure private links and unified data lakes leveraging both AWS S3 and GCP Cloud Storage.

- MLOps Pipeline: Continuous Integration/Continuous Delivery (CI/CD) pipelines ensure agents and their underlying models are constantly monitored, re-trained, and deployed automatically across both cloud environments.
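The sequential logic (Task Agent, then Validation Agent, then Execution Agent) can be sketched as a chain of functions passing a shared state. This is a plain-Python illustration, not Step Functions or Cloud Workflows syntax; the agent functions and the order fields are hypothetical.

```python
def task_agent(state):
    """Turn the incoming request into a structured order."""
    state = dict(state)
    state["order"] = {"sku": state["request"]["sku"], "qty": state["request"]["qty"]}
    return state

def validation_agent(state):
    """Check the structured order before anything is executed."""
    state = dict(state)
    state["valid"] = state["order"]["qty"] > 0
    return state

def execution_agent(state):
    """Carry out the validated order (here, just mark it submitted)."""
    state = dict(state)
    state["status"] = "submitted"
    return state

def run_workflow(steps, state):
    """Run steps in order; stop early if validation fails."""
    trace = []
    for step in steps:
        state = step(state)
        trace.append(step.__name__)
        if state.get("valid") is False:
            state["status"] = "rejected"
            break
    return state, trace

steps = [task_agent, validation_agent, execution_agent]
ok_state, ok_trace = run_workflow(steps, {"request": {"sku": "A-100", "qty": 5}})
bad_state, bad_trace = run_workflow(steps, {"request": {"sku": "A-100", "qty": 0}})
```

A managed workflow service adds what this sketch leaves out, such as retries, timeouts, and persisted execution history, but the state-passing and early-exit structure is the same.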

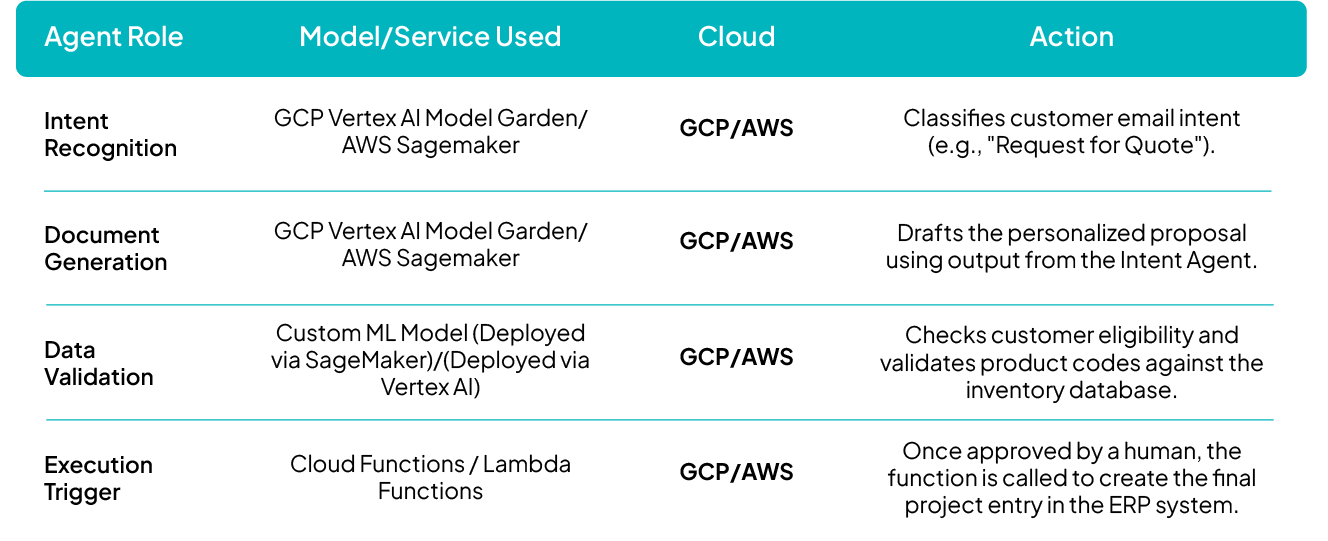

Real-World Use Case: Enquiry-to-Execution Workflow

To illustrate the multi-cloud collaboration, consider the Enquiry-to-Execution Workflow, where speed and data accuracy are critical.

How Ankercloud Accelerates Your Agentic Deployment

Deploying resilient, multi-cloud Agentic AI is highly complex, requiring expertise across multiple hyperscalers and MLOps practices.

- Multi-Cloud Expertise: As a Premier Partner for AWS and GCP, we architect unified data governance and security models that ensure seamless, compliant agent operation regardless of which cloud service is hosting the model or data.

- Accelerated Deployment: We utilize pre-built, production-ready MLOps templates and orchestration frameworks specifically designed for multi-agent systems, drastically cutting time-to-market.

- Cost Optimization: We design the architecture to strategically leverage the most cost-efficient compute (e.g., specialized GPUs) or managed services available on either AWS or GCP for each task.

Ready to transition your proof-of-concept into a production-ready autonomous workflow?

Partner with Ankercloud to secure and scale your multi-cloud Agentic AI architecture.

IoT in Manufacturing: Powering the Smart Factory and Industry 4.0

The Factory of the Future: Your Digital Advantage is Now

Manufacturing is no stranger to revolution, but the current shift is fundamentally different. It's not just about faster machines; it's about intelligent data. The integration of the Internet of Things (IoT) is reshaping how industries operate, paving the way for the era of Smart Manufacturing and Industry 4.0.

IoT acts as the key enabler, bridging the physical world of machinery and assets with the digital world of software and analytics. By connecting machines, systems, and people through intelligent data exchange, IoT transforms factories into dynamic, responsive environments capable of real-time decision-making, predictive insights, and continuous improvement.

IoT: The Engine Behind Industry 4.0 Transformation

The Smart Manufacturing Vision hinges entirely on seamless connectivity. IoT creates a connected ecosystem across your entire operation, offering end-to-end visibility that was previously impossible.

Key Pillars of the IoT-Driven Smart Factory

- Smart Factory Connectivity: IoT provides the nervous system for your factory. It connects every piece of equipment, system, and sensor, creating agile and responsive factory operations with complete, real-time visibility across production environments and supply chains.

- Efficiency & Productivity: IoT supports deep automation, allowing for real-time process monitoring and data-driven optimization. This directly enhances productivity and operational performance by eliminating guesswork and tuning processes continuously.

- Integration with Cloud, AI & Analytics: The true power lies in the fusion. Combining IoT data streams with powerful cloud platforms, artificial intelligence (AI), and machine learning creates intelligent, adaptive, and self-optimizing production systems that learn and adjust without human intervention.

Driving Measurable ROI: The Core Benefits of Connected Assets

Adopting IoT delivers tangible benefits that hit your bottom line, transforming traditional reactive maintenance and quality control into proactive, intelligent operations.

Predictive Maintenance: Zero Unplanned Downtime

IoT sensors constantly monitor the health of critical assets—vibration, temperature, pressure, and sound. IoT-driven analytics help you prevent equipment failures, minimize costly unplanned downtime, and significantly extend the lifecycle of your most valuable machinery. You fix problems before they happen.
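One simple way to turn a sensor stream into an early warning is a rolling z-score: flag any reading that sits far outside the recent baseline. The sketch below is a minimal illustration under assumed values (window size, threshold, and the vibration readings are all hypothetical); production systems typically rely on trained models rather than a fixed statistical rule.

```python
from statistics import mean, stdev

def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings far from the mean of the preceding window."""
    flagged = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        # Skip flat baselines to avoid dividing by zero.
        if sigma > 0 and abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged

# Hypothetical vibration amplitudes with one spike at index 6.
vibration = [0.50, 0.52, 0.49, 0.51, 0.50, 0.51, 0.95, 0.50]
alerts = flag_anomalies(vibration)
```

An alert like this would normally feed a maintenance work order or a dashboard notification rather than stop a machine on its own.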

Quality & Traceability: Guaranteeing Excellence

Continuous data collection through IoT enables higher product quality standards. By monitoring conditions at every stage, you can achieve early defect detection and maintain complete traceability from raw materials to finished goods—a crucial capability for regulatory compliance and customer trust.

Sustainability: A Greener Production Footprint

IoT contributes directly to sustainable manufacturing goals. By giving you granular data on consumption, you can identify and reduce waste, optimize resource and energy consumption, and support environmentally conscious production practices, aligning your operations with modern ESG mandates.

Human Impact: Safety and Collaboration

IoT is improving the work environment. It enhances workplace safety with connected wearables and automated safety alerts. It also boosts collaboration by enabling remote monitoring capabilities, allowing specialists to diagnose and assist globally without needing to be physically present.

Ankercloud: Your Partner for the Industry 4.0 Journey

Moving to a Smart Factory requires more than just installing sensors; it demands a robust, secure, and scalable cloud architecture ready for massive data ingestion and AI processing.

At Ankercloud, we design the full IoT solution lifecycle to maximize your business value:

- Resilient Data Pipelines: We engineer high-capacity data pipelines for seamless ingestion, processing, and storage of real-time sensor data at scale.

- Interactive Dashboards & Alerts: We build interactive dashboards for live operational insights and implement automated alerting and notification systems to ensure rapid incident response.

- Mobile Accessibility: Our solutions are mobile-enabled to keep remote teams, site managers, and maintenance crews informed and able to act on the go.

- KPI and Performance Modules: We translate raw data into measurable improvements, defining KPIs and performance modules that clearly track gains in productivity and efficiency.

- Enterprise Integration: We integrate seamlessly with existing enterprise systems (e.g., ERP, CRM, SCADA), enabling real-time ROI visibility, cost-performance analysis, and strategic decision-making across production and operations.

Ready to connect your assets and unlock the power of real-time intelligence?

Partner with Ankercloud to accelerate your Industry 4.0 transformation.

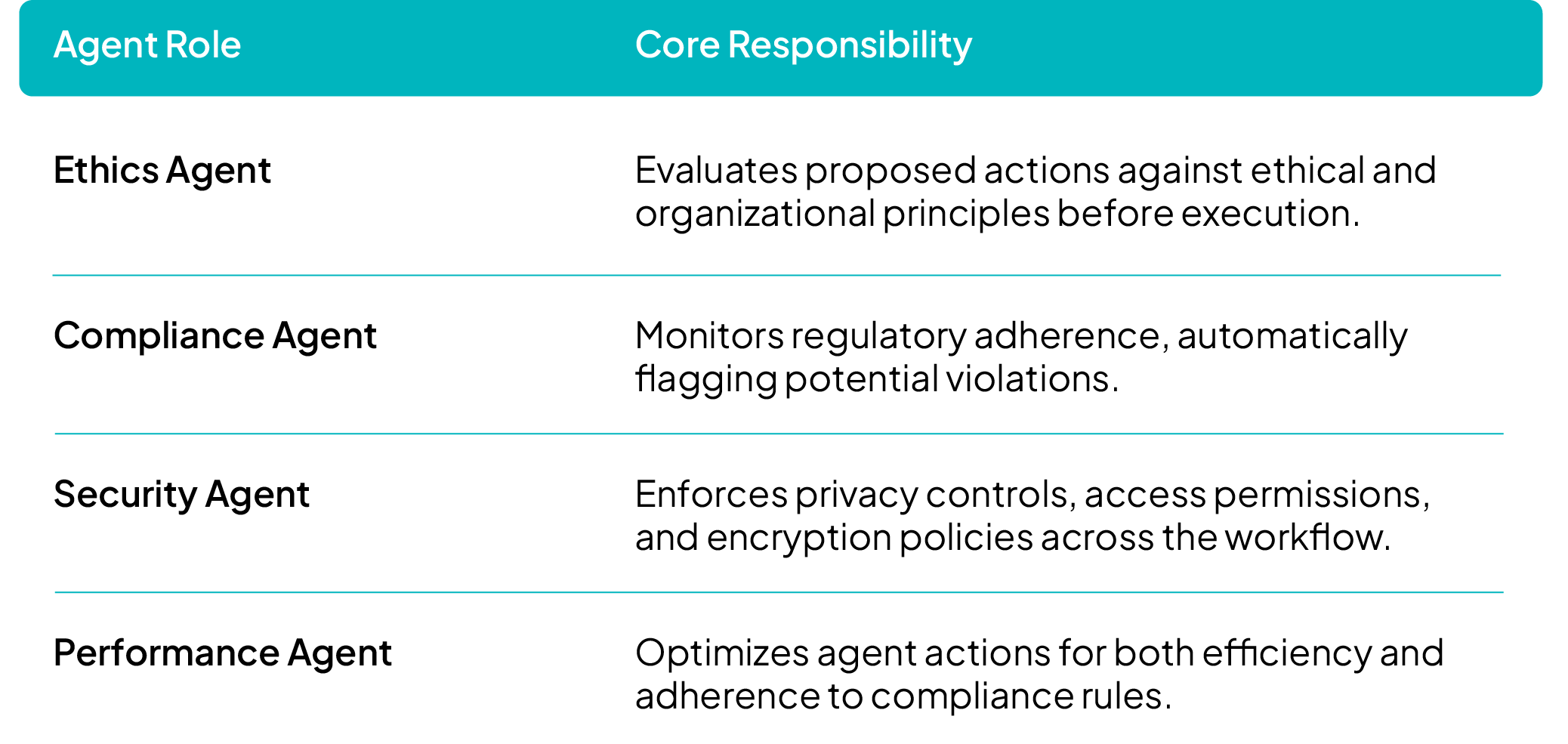

Ethical Governance and Regulatory Compliance Solutions for Agentic AI

The Governance Imperative: Building Trust in Autonomous AI

The power of Agentic AI, autonomous systems that make decisions and execute complex workflows, comes with profound responsibility. For enterprises, deploying multi-agent systems requires a governance framework that moves beyond traditional model monitoring. It demands assurance that every autonomous action is transparent, fair, and legally compliant.

At Ankercloud, we provide comprehensive Agentic AI solutions designed to ensure ethical governance and regulatory compliance across these autonomous systems. Our framework integrates human oversight, intelligent monitoring, and policy-driven automation, empowering organizations to deploy AI responsibly while maintaining transparency, fairness, and accountability at every stage of the agentic workflow.

1. Ethical Governance Framework: Intelligence Embedded

Our governance model doesn't just review decisions; it embeds ethical intelligence directly into the fabric of agent workflows. This ensures that responsible principles are non-negotiable components of every autonomous action.

- Transparency & Explainability: Each agent records its decision logic and context, creating a clear audit trail. This ensures that every outcome, whether automated or escalated, is fully auditable for human review.

- Accountability: Supervisory agents monitor actions and escalate decisions requiring human validation. This creates a clear line of shared responsibility between the human team and the AI system.

- Fairness & Bias Mitigation: We implement continuous fairness assessments to detect and correct biases through automated bias-reporting agents and regular retraining cycles, ensuring equitable outcomes.

- Privacy Protection: Robust data governance policies enforce anonymization, secure transmission, and the principle of minimal data exposure during all inter-agent communication.

2. Regulatory Compliance Management: Global Legal Alignment

Navigating the fragmented global landscape of AI regulation is complex. Our solution simplifies this by building compliance into the core architecture, making your AI operations future-ready.

- Global Legal Alignment: Our Compliance agents ensure adherence to key regulations and standards, including GDPR, the EU AI Act, HIPAA, and ethical guidelines like those from the IEEE.

- Automated Policy Enforcement: Intelligent rule engines validate compliance in real time during key stages: data ingestion, model training, deployment, and post-production monitoring.

- Comprehensive Auditability: We maintain version-controlled logs and digital compliance dashboards, providing full visibility into model updates, decision outcomes, and risk assessments necessary for external audits.
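A rule engine with an audit trail can be sketched in a few lines. The rule IDs, stage names, and record fields below are hypothetical and do not represent any specific regulation's requirements; the point is the pattern of stage-scoped checks whose results are always logged.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Rule:
    rule_id: str
    stage: str                      # lifecycle stage the rule applies to
    check: Callable[[dict], bool]   # returns True when the record complies
    message: str

# Hypothetical policy rules for two lifecycle stages.
RULES = [
    Rule("no-raw-pii", "data_ingestion",
         lambda r: not r.get("contains_raw_pii", False),
         "Raw personal data must be anonymized before ingestion."),
    Rule("model-card-present", "deployment",
         lambda r: bool(r.get("model_card")),
         "A model card is required before deployment."),
]

AUDIT_LOG: List[dict] = []

def evaluate(stage, record, rules=RULES):
    """Run every rule for the given stage and append the outcome to the audit log."""
    violations = [r.rule_id for r in rules if r.stage == stage and not r.check(record)]
    outcome = {"stage": stage, "passed": not violations, "violations": violations}
    AUDIT_LOG.append(outcome)
    return outcome

ingest_result = evaluate("data_ingestion", {"contains_raw_pii": True})
deploy_result = evaluate("deployment", {"model_card": "cards/churn-v3.md"})
```

Logging passes as well as failures is a deliberate choice here: an auditor usually needs evidence that a check ran, not only evidence of what it blocked.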

3. Agentic Workflow Architecture: Governance as a Service

In our multi-agent ecosystem, governance is integrated directly into task execution through specialized, collaborative agents.

This architecture utilizes parallel processing to simultaneously evaluate ethics, compliance, and performance, ensuring that ethical clearance is a prerequisite for any task deployment.

4. Continuous Oversight & Improvement

To sustain compliance and ethical integrity in a constantly evolving environment, our systems feature ongoing monitoring and dynamic policy adaptation:

- Feedback Loops: Agents continuously self-assess and report ethical or regulatory anomalies for immediate review.

- Human-in-the-Loop Governance: Critical actions, high-impact decisions, and flagged cases are escalated to human reviewers, ensuring human judgment is applied where necessary.

- Adaptive Compliance Learning: Our systems are designed to evolve with new laws, standards, and enterprise governance frameworks through automated policy updates and model retraining.
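The escalation logic behind Human-in-the-Loop governance can be illustrated with a small routing function. The category names, the risk threshold, and the field names are assumptions for this sketch; an action with no risk score is treated as high risk so that missing data fails safe.

```python
# Hypothetical categories that always require human sign-off.
CRITICAL_CATEGORIES = {"payment", "contract_signature"}

def route_action(action, risk_threshold=0.7):
    """Decide whether an agent action runs automatically or waits for a human."""
    reasons = []
    # A missing risk score defaults to 1.0, which forces escalation.
    if action.get("risk_score", 1.0) >= risk_threshold:
        reasons.append("high risk score")
    if action.get("flagged"):
        reasons.append("flagged by monitoring agent")
    if action.get("category") in CRITICAL_CATEGORIES:
        reasons.append("critical category")
    return {
        "decision": "escalate" if reasons else "auto_approve",
        "reasons": reasons,
    }

routine = route_action({"category": "report", "risk_score": 0.2})
payment = route_action({"category": "payment", "risk_score": 0.2})
unknown = route_action({"category": "report"})
```

Returning the reasons alongside the decision gives the human reviewer context and leaves a record that can be attached to the audit trail.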

Key Benefits

By partnering with Ankercloud, you gain:

- End-to-end ethical and regulatory assurance across multi-agent environments.

- Enhanced trust, transparency, and accountability in AI-driven decision-making.

- Real-time compliance validation through automated governance agents.

- A scalable, future-ready governance model adaptable to changing global AI regulations.

Ready to deploy autonomous AI with confidence and integrity?

Partner with Ankercloud to secure the ethical future of your Agentic AI systems.

Agentic AI Use Cases: Transforming Core Business Workflows for Real-World ROI

The Shift: From Experimentation to Execution

Ready to ditch the manual bottlenecks and elevate your business with intelligent automation?

The next wave of AI isn't about isolated tasks; it's about orchestrating entire workflows. Dive into our latest use cases to see how Agentic AI is driving a 90% reduction in manual data entry, cutting quote-to-execution time by up to 70%, and transforming product creation with Vision + GenAI Fusion. This is how you achieve real-world ROI, not just potential.

The breakthrough lies in moving beyond simple chatbots and static machine learning models to deploy autonomous systems that solve complex, multi-step business problems end to end. At Ankercloud, we are engineering these Multi-Agent Workflows to drive profound efficiencies across the enterprise.

1. Intelligent Order Entry & Fulfilment Automation

Enterprises frequently lose time and accuracy translating customer purchase orders (POs) across various channels (email, PDF, voice) into their core ERP systems. Agentic AI automates this entire order lifecycle, from customer inquiry to final dispatch.

Solution: A Multi-Agent Order Orchestration System


Outcome

90% reduction in manual data entry

End-to-end order processing automation

Integration ready with systems like SAP, Salesforce, and custom CRMs.

2. Enquiry-to-Execution Workflow (E2E Intelligent Process Automation)

The time lost between receiving a customer inquiry and beginning the final deliverable (e.g., quote -> approval -> project start) creates unnecessary human bottlenecks. Agentic AI now automates this "middle office" process.

Solution: A Multi-Agent Process Automation System


Outcome

Cuts enquiry-to-execution time by up to 70%.

No human bottlenecks in approvals.

Ensures consistent quote and contract templates via GenAI.

3. Image Generation & 3D Model Conversion (Vision + GenAI Fusion)

Industries like jewelry, textiles, e-commerce, and architecture require fast, high-quality asset creation (e.g., product photography -> 3D render -> virtual showroom). Agentic AI fuses Vision and Generative models to automate this content pipeline.

Solution: An AI Image-to-3D Conversion Suite


Outcome

10x faster product modeling.

80% cost reduction in 3D asset creation.

Assets are metaverse / AR commerce ready.

4. Autonomous Quotation & Pricing Engine

Sales teams need dynamic quoting that reacts instantly to live market inputs (demand, margin, competition) but often get slowed down by manual approval loops. Agentic AI generates, optimizes, and approves quotes autonomously.

Solution: A Multi-Agent Quoting System

Outcome

Enables real-time dynamic pricing.

Accelerates sales closure and improves margin accuracy.

Reduces approval loops and human bottlenecks.
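As a rough illustration of the generate, optimize, and approve steps described above, here is a minimal sketch. The function `generate_quote`, its parameters (`demand_index`, `target_margin`, `margin_floor`), and the pricing rule itself are assumptions made for this example, not Ankercloud's actual pricing logic.

```python
from dataclasses import dataclass


@dataclass
class Quote:
    price: float
    margin: float        # fraction of the price that is profit
    auto_approved: bool


def generate_quote(unit_cost: float, demand_index: float, competitor_price: float,
                   target_margin: float = 0.30, margin_floor: float = 0.15) -> Quote:
    # Generate: start from cost plus the target margin, scaled by live demand.
    price = unit_cost / (1 - target_margin) * demand_index
    # Optimize: never quote above the competitor's price (assumed rule).
    price = min(price, competitor_price)
    margin = (price - unit_cost) / price
    # Approve: sign off automatically only when the margin clears the floor;
    # anything below it would be routed to a human reviewer.
    return Quote(round(price, 2), round(margin, 4), margin >= margin_floor)


healthy = generate_quote(unit_cost=70.0, demand_index=1.0, competitor_price=120.0)
squeezed = generate_quote(unit_cost=70.0, demand_index=1.0, competitor_price=75.0)
```

In this sketch the margin floor is the guardrail: quotes that clear it skip the approval loop, while quotes squeezed by competitor pricing still reach a person.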

The Future of Work is Autonomous

These use cases demonstrate that Agentic AI is not just about isolated tasks; it’s about holistic workflow automation. By deploying multi-agent systems, Ankercloud helps enterprises eliminate manual friction, reduce operational costs, and unlock unprecedented speed.

Ready to identify your first high-ROI Agentic AI use case?

Partner with Ankercloud to transform your core business processes.

Your Trusted Ally in Intelligent Automation: Agentic AI

The Shift: From Reactive AI to Proactive Autonomy

For years, Artificial Intelligence has been a powerful tool, but one that required constant human direction. You built the model, but your team still had to manually prepare data, trigger processes, and interpret every output. This fragmentation created bottlenecks and limited scalability.

The new era of Agentic AI changes everything.

Agentic AI systems are designed not just to analyze, but to act autonomously. They orchestrate complex, multi-step workflows end-to-end, making decisions, collaborating with other systems, and continually learning to achieve a high-level goal—all without constant human oversight.

This shift transforms AI from a back-office tool into a proactive Business Ally that drives measurable results and unparalleled efficiency.

The Core Pillars of Agentic AI Value

At Ankercloud, we engineer Agentic AI solutions tailored to address your most critical business challenges. These agents deliver value across distinct areas:

1. Autonomous Decision Agents

- Core Function: Accelerate decisions and deliver faster outcomes.

- Example Impact: Instantly approving loans based on credit models or dynamically adjusting pricing in e-commerce based on real-time demand.

2. Data Analytics Agents

- Core Function: Transform complex data into clear, predictive insights.

- Example Impact: Instantly summarizing large legal contracts, or providing conversational answers to complex finance queries without needing SQL.

3. Process Automation Agents

- Core Function: Remove repetitive tasks and eliminate human error.

- Example Impact: Fully automating HR onboarding (drafting contracts, provisioning access) or managing inventory by autonomously reordering stock.

4. Learning & Adaptive Agents

- Core Function: Continuously evolve workflows and outcomes based on new data and feedback.

- Example Impact: Fine-tuning a chatbot's responses after every customer interaction to improve accuracy, or optimizing a logistics route daily as it learns traffic patterns.

Multi-Agent Workflows: Collaboration for Complex Tasks

Real-world business problems are rarely solved by a single tool. Ankercloud specializes in building tailored multi-agent systems that think, act, and collaborate as a single intelligent unit. These systems allow specialized agents (e.g., LLMs, vision models, speech models) to coordinate holistically.

- Sequential Task Coordination: Agents pass outputs to each other in a pipeline. For example, sending an audio input to a speech agent for transcription, then forwarding the transcribed text to an LLM agent for summarization or translation.

- Parallel Task Execution: Multiple agents can work simultaneously on different subtasks, drastically reducing latency and increasing throughput.

- Dynamic Collaboration: Agents communicate, delegate, and synchronize with each other, sharing intermediate results and adjusting strategies in real time—mimicking a high-performing human team.
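The sequential and parallel patterns above can be sketched with Python's `asyncio`. The three agent functions below are hypothetical stand-ins written for this example (a real system would call speech and LLM services); only the coordination pattern is the point.

```python
import asyncio


async def speech_agent(audio: bytes) -> str:
    """Hypothetical stand-in for a speech-to-text model."""
    await asyncio.sleep(0)  # placeholder for a real model call
    return audio.decode("utf-8")


async def summarizer_agent(text: str) -> str:
    """Hypothetical stand-in for an LLM summarizer: keeps the first sentence."""
    await asyncio.sleep(0)
    return text.split(".")[0].strip() + "."


async def translator_agent(text: str) -> str:
    """Hypothetical stand-in for an LLM translator: only tags the text."""
    await asyncio.sleep(0)
    return f"[de] {text}"


async def run_workflow(audio: bytes) -> dict:
    # Sequential step: every later agent needs the transcript first.
    transcript = await speech_agent(audio)
    # Parallel step: summary and translation are independent of each other,
    # so they can run concurrently.
    summary, translation = await asyncio.gather(
        summarizer_agent(transcript),
        translator_agent(transcript),
    )
    return {"transcript": transcript, "summary": summary, "translation": translation}


result = asyncio.run(run_workflow(b"Order 42 is delayed. Please notify the customer."))
```

Dynamic collaboration, where agents delegate and adjust strategy at run time, would need a shared message channel or an orchestrator on top of this; the sketch deliberately stops at the two simpler patterns.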

How Ankercloud Helps You Deploy Agentic AI

Transitioning to autonomous workflows requires specialized expertise to ensure security, scalability, and integration with your existing infrastructure. As an AWS/GCP Premier Partner, Ankercloud acts as your end-to-end architect and deployment partner:

- Cloud-Native Architecture: We leverage native services like Google Vertex AI Agents and AWS Bedrock Agents to build agents that are inherently secure, scalable, and cost-optimized within your multi-cloud environment.

- Workflow Orchestration: We design the underlying systems that allow your agents to collaborate using advanced protocols, ensuring seamless communication across databases and external APIs.

- Security & Governance: We embed Zero Trust principles and MLOps governance from the ground up, guaranteeing your agents operate reliably and compliantly, minimizing risk.

- Focus on ROI: We prioritize use cases that target your highest operational costs, ensuring the deployment of your agents delivers immediate, measurable Return on Investment (ROI).

Ready to Meet Your New Business Ally?

The future of work is not just assisted; it’s autonomous. By partnering with Ankercloud, you gain the expertise needed to deploy these next-generation autonomous workflows successfully.

Partner with Ankercloud to transform your operations with production-grade Agentic AI solutions.

Agentic AI in Action: Driving Real-World ROI with Ankercloud

The Agentic AI Shift: From Passive Models to Proactive Impact

For years, Artificial Intelligence promised transformation, but often required constant human oversight to manage models, stitch together workflows, and validate data. That era is over.

Agentic AI represents the true breakthrough: a new class of intelligent systems designed to act autonomously, orchestrating complex, multi-step tasks end-to-end. At Ankercloud, we specialize in cloud and machine learning solutions as a premier partner for AWS and GCP. Over the last year, we’ve been actively building Agentic AI-powered solutions for our clients, helping them reduce costs, accelerate operations, and unlock new value from their existing infrastructure.

We don’t just talk about potential; we deliver proven impact.

Ankercloud’s Impact: Agentic AI Across the Enterprise

The true power of Agentic AI is its versatility. By focusing on workflow automation, our agents are driving tangible return on investment (ROI) across traditionally labor-intensive business units:

HR & Workforce Management

Agentic AI is eliminating repetitive HR tasks, allowing teams to focus on strategy and employee experience.

- Automated Drafting: Agents draft routine emails, job descriptions, and offer letters, cutting down on manual paperwork.

- Timesheet Automation: Agents log employee hours, track delays, and update core HR systems directly, minimizing administrative errors.

- Onboarding Assistants: New hires are guided through policies, training, and compliance checks by interactive, personalized assistants.

Social Media & Marketing

We are helping marketing teams scale content generation while maintaining quality and audience relevance.

- Content Generation Agents: These agents create short, engaging reels and clips optimized for user engagement, dramatically increasing the speed of your content pipeline.

Legal & Compliance

For firms buried under documentation, Agentic AI is a game-changer for speed and risk management.

- Document Evaluation & Summarization: AI agents evaluate and summarize complex assets, contracts, and compliance documents in a fraction of the time.

- Knowledge Assistants: Using RAG (Retrieval-Augmented Generation) over internal policies, specialized chatbots can instantly answer complex compliance queries, reducing legal consultation time.
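To make the retrieval half of RAG concrete, here is a minimal sketch that ranks policy snippets against a question and builds a grounded prompt. The `POLICIES` texts are invented for this example, and the word-overlap cosine score is a simple substitute for the embedding search a production system would more likely use; the call to the language model itself is left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


# Hypothetical internal policy snippets, keyed by a short name.
POLICIES = {
    "retention": "Customer records must be retained for seven years after contract end.",
    "travel": "Employees must book travel through the approved portal.",
    "access": "Access to production data requires manager approval and is reviewed quarterly.",
}


def retrieve(question: str, k: int = 1) -> list[str]:
    """Return the names of the k policies most similar to the question."""
    q = tokenize(question)
    ranked = sorted(POLICIES, key=lambda name: cosine(q, tokenize(POLICIES[name])),
                    reverse=True)
    return ranked[:k]


def build_prompt(question: str) -> str:
    """Assemble the retrieved text and the question into one prompt for an LLM."""
    context = "\n".join(POLICIES[name] for name in retrieve(question))
    return f"Answer using only this policy text:\n{context}\n\nQuestion: {question}"


question = "How long must customer records be retained?"
top = retrieve(question)
prompt = build_prompt(question)
```

Restricting the model to the retrieved policy text is what lets the assistant answer from internal documents rather than from its general training data.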

Interior Design & Architecture

Agentic AI is moving beyond data processing to unlock new frontiers of creativity and customer engagement.

- Photorealistic Mockups: Generative agents produce photorealistic interior mockups featuring client products, reproducing each product's exact specifications in imaginative settings.

- Styling Recommendations: RAG-powered chatbots recommend styles, furniture, and materials from client-supplied catalogs, keeping suggestions on-brand while leaving the user in control.

Why Choose Ankercloud for Agentic AI?

Implementing Agentic AI successfully requires deep expertise across cloud infrastructure, security, and machine learning operations (MLOps).

- Proven Cloud Partnership: As a premier partner of AWS and GCP, we ensure your Agentic AI solutions are securely hosted, scalable, and fully optimized for cost and performance within your existing multi-cloud environment.

- Focus on ROI: We don't just build agents; we engineer autonomous workflows that directly target and reduce your highest operational costs (e.g., HR administration, content creation, compliance review).

- End-to-End Delivery: Our experience spans the entire lifecycle, ensuring your Agentic AI initiatives move seamlessly from concept to production, guaranteeing reliability and measurable business impact.

Ready to harness the power of autonomous workflows to reduce costs and unlock new value?

Partner with Ankercloud to transform your operations with production-grade Agentic AI solutions.

Saasification and Cloud Migration for vitagroup: a key player in the highly-regulated German Healthcare sector

Enhancing DDoS Protection with Extended IP Block Duration Using AWS WAF Rate-Based Rules


Smart Risk Assessment: Bitech’s AI-Driven Solution for Property Insurance

Streamlining CI/CD: A Seamless Journey from Bitbucket to Elastic Beanstalk with AWS CodePipeline


Setting Up Google Cloud Account and Migrating Critical Applications for Rakuten India

gocomo Migrates Social Data Platform to AWS for Performance and Scalability with Ankercloud

Benchmarking AWS performance to run environmental simulations over Belgium

Model development for Image Object Classification and OCR analysis for mining industry

Optimizing Cloud Infrastructure: How Former03 Achieved Operational Excellence with AWS
